Hi,

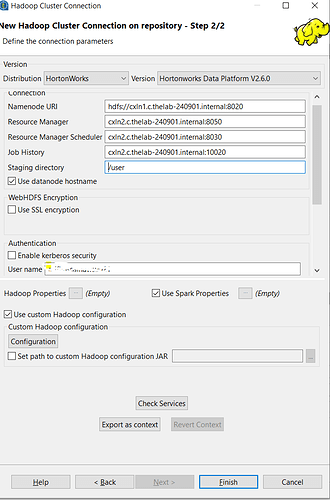

I'm trying to establish a connection between Talend BigData Open Studio (ETL) and a CloudX Hadoop cluster.

I was able to fetch the cluster details through Ambari into the Talend Hadoop connection, but the next step fails because Talend cannot reach the nodes listed in the fetched configuration. It seems the CloudX Hadoop cluster's nodes are not accessible from outside. Could anyone please help me with this?
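To confirm whether the nodes really are unreachable from the machine running Talend, one option is a plain TCP connection test against each host and port that appears in the configuration Ambari returned. The sketch below uses only Python's standard `socket` module. The hostnames are hypothetical placeholders, and the ports (8020 for the HDFS NameNode RPC, 8032 for the YARN ResourceManager) are common Hadoop defaults, not values confirmed for this cluster. Substitute whatever `fs.defaultFS`, `yarn.resourcemanager.address`, etc. actually contain.

```python
import socket

# Hypothetical node list: replace with the hostnames/ports from the
# configuration Talend fetched via Ambari. The ports are common Hadoop
# defaults and are assumptions, not this cluster's confirmed settings.
NODES = [
    ("namenode.example.internal", 8020),         # HDFS NameNode RPC (assumed)
    ("resourcemanager.example.internal", 8032),  # YARN ResourceManager (assumed)
]


def is_reachable(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers DNS resolution failures (socket.gaierror),
        # refused connections and timeouts.
        return False


def report(nodes):
    """Print one reachability line per (host, port) pair and return the results."""
    results = {}
    for host, port in nodes:
        ok = is_reachable(host, port)
        results[(host, port)] = ok
        print(f"{host}:{port} -> {'reachable' if ok else 'NOT reachable'}")
    return results


if __name__ == "__main__":
    report(NODES)
```

If the hostnames fail to resolve at all, the configuration probably contains cluster-internal names that the Talend machine cannot see. If they resolve but the connections time out or are refused, the ports are likely blocked from outside the cluster's network.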