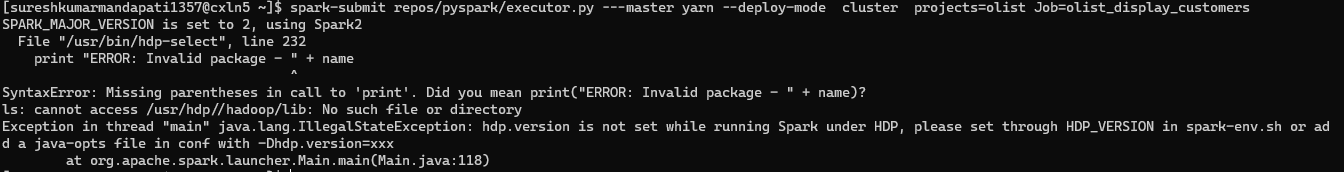

I am getting the error below when submitting the Spark job.

Did you set your default Python path to python3? That is usually what causes this error.

I ran the same command in a fresh session on your account, and it works fine.

I have attached an image showing how I am submitting the PySpark job.

Below is the error log.

Can you please let me know which environment variables you are setting?
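To compare a failing session with a working one, a small sketch like the following prints the interpreter that is running and the Spark-related variables mentioned in this thread. It only reads the environment; the list of variables is an assumption rather than an official list, and `spark_python_report` is a hypothetical helper name.

```python
import os
import sys

# Variables discussed in this thread; this selection is an assumption, extend as needed.
SPARK_VARS = ("SPARK_HOME", "PYSPARK_PYTHON", "PYSPARK_DRIVER_PYTHON", "PYTHONPATH")


def spark_python_report(env=None):
    """Return the running interpreter plus each Spark-related variable (None if unset)."""
    env = os.environ if env is None else env
    report = {
        "executable": sys.executable,
        "version": "%d.%d" % sys.version_info[:2],
    }
    for name in SPARK_VARS:
        report[name] = env.get(name)
    return report


if __name__ == "__main__":
    for key, value in spark_python_report().items():
        print("%-22s %s" % (key, value))
```

Running it both in the terminal where `spark-submit` fails and in a fresh session where it works should show whether the interpreter or `PYSPARK_PYTHON` differs between the two.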

I tried adding these lines too, but it still fails with the same error:

```python
import os
import sys

# Point at the Spark installation and its bundled Python libraries
os.environ["SPARK_HOME"] = "/usr/spark2.4.3"
os.environ["PYLIB"] = os.environ["SPARK_HOME"] + "/python/lib"

# In the two lines below, use /usr/bin/python2.7 if you want to use Python 2
os.environ["PYSPARK_PYTHON"] = "/usr/local/anaconda/bin/python"
os.environ["PYSPARK_DRIVER_PYTHON"] = "/usr/local/anaconda/bin/python"

# Put py4j and pyspark at the front of the module search path
sys.path.insert(0, os.environ["PYLIB"] + "/py4j-0.10.7-src.zip")
sys.path.insert(0, os.environ["PYLIB"] + "/pyspark.zip")
```
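When lines like these still fail, it can help to confirm that both archives actually exist before putting them on `sys.path`, so that a wrong path shows up as a clear error instead of a later import failure. A sketch of a more defensive variant, assuming the same Spark 2.4.3 layout (`python/lib/pyspark.zip` and `python/lib/py4j-0.10.7-src.zip`); `add_pyspark_paths` is a hypothetical helper:

```python
import os
import sys


def add_pyspark_paths(spark_home, py4j_zip="py4j-0.10.7-src.zip"):
    """Prepend pyspark.zip and the py4j zip under spark_home to sys.path.

    Raises FileNotFoundError if either archive is missing, rather than letting
    a bad path surface later as a confusing import error.
    """
    lib = os.path.join(spark_home, "python", "lib")
    # Same order as the original lines: py4j first, then pyspark, so pyspark ends up at index 0
    archives = [os.path.join(lib, py4j_zip), os.path.join(lib, "pyspark.zip")]
    missing = [path for path in archives if not os.path.isfile(path)]
    if missing:
        raise FileNotFoundError("missing: " + ", ".join(missing))
    for path in archives:
        if path not in sys.path:
            sys.path.insert(0, path)
    os.environ["SPARK_HOME"] = spark_home
    return archives
```

Usage would be `add_pyspark_paths("/usr/spark2.4.3")` before `import pyspark`. Note that this only changes the driver process; Spark starts its Python workers as separate processes with their own `PYTHONPATH`, which is the value printed in the log.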

```
(base) [sureshkumarmandapati1357@cxln4 ~]$ /usr/spark2.4.3/bin/spark-submit --master local[*] /usr/spark2.4.3/examples/src/main/python/pi.py
21/06/25 10:09:04 INFO spark.SparkContext: Running Spark version 2.4.3
21/06/25 10:09:04 INFO spark.SparkContext: Submitted application: PythonPi
21/06/25 10:09:04 INFO spark.SecurityManager: Changing view acls to: sureshkumarmandapati1357
21/06/25 10:09:04 INFO spark.SecurityManager: Changing modify acls to: sureshkumarmandapati1357
21/06/25 10:09:04 INFO spark.SecurityManager: Changing view acls groups to:
21/06/25 10:09:04 INFO spark.SecurityManager: Changing modify acls groups to:
21/06/25 10:09:04 INFO spark.SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(sureshkumarmandapati1357); groups with view permissions: Set(); users with modify permissions: Set(sureshkumarmandapati1357); groups with modify permissions: Set()
21/06/25 10:09:04 INFO util.Utils: Successfully started service 'sparkDriver' on port 37224.
21/06/25 10:09:04 INFO spark.SparkEnv: Registering MapOutputTracker
21/06/25 10:09:04 INFO spark.SparkEnv: Registering BlockManagerMaster
21/06/25 10:09:04 INFO storage.BlockManagerMasterEndpoint: Using org.apache.spark.storage.DefaultTopologyMapper for getting topology information
21/06/25 10:09:04 INFO storage.BlockManagerMasterEndpoint: BlockManagerMasterEndpoint up
21/06/25 10:09:04 INFO storage.DiskBlockManager: Created local directory at /tmp/blockmgr-a8ea5821-3518-4563-9c6c-e196d38dae5a
21/06/25 10:09:04 INFO memory.MemoryStore: MemoryStore started with capacity 93.3 MB
21/06/25 10:09:04 INFO spark.SparkEnv: Registering OutputCommitCoordinator
21/06/25 10:09:04 INFO util.log: Logging initialized @2947ms
21/06/25 10:09:04 INFO server.Server: jetty-9.3.z-SNAPSHOT, build timestamp: unknown, git hash: unknown
21/06/25 10:09:05 INFO server.Server: Started @3067ms
21/06/25 10:09:05 WARN util.Utils: Service 'SparkUI' could not bind on port 4040. Attempting port 4041.
21/06/25 10:09:05 INFO server.AbstractConnector: Started ServerConnector@63bacafd{HTTP/1.1,[http/1.1]}{0.0.0.0:4041}
21/06/25 10:09:05 INFO util.Utils: Successfully started service 'SparkUI' on port 4041.
21/06/25 10:09:05 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@c90dfeb{/jobs,null,AVAILABLE,@Spark}
21/06/25 10:09:05 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@46eeaeca{/jobs/json,null,AVAILABLE,@Spark}
21/06/25 10:09:05 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@3acf81a2{/jobs/job,null,AVAILABLE,@Spark}
21/06/25 10:09:05 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@2ad0c350{/jobs/job/json,null,AVAILABLE,@Spark}
21/06/25 10:09:05 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@19fba03e{/stages,null,AVAILABLE,@Spark}
21/06/25 10:09:05 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@5f7fe032{/stages/json,null,AVAILABLE,@Spark}
21/06/25 10:09:05 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@51b0a38d{/stages/stage,null,AVAILABLE,@Spark}
21/06/25 10:09:05 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@19369c13{/stages/stage/json,null,AVAILABLE,@Spark}
21/06/25 10:09:05 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@4dcef873{/stages/pool,null,AVAILABLE,@Spark}
21/06/25 10:09:05 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@151d212{/stages/pool/json,null,AVAILABLE,@Spark}
21/06/25 10:09:05 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@7cef7431{/storage,null,AVAILABLE,@Spark}
21/06/25 10:09:05 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@5194b9bb{/storage/json,null,AVAILABLE,@Spark}
21/06/25 10:09:05 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@6ba3de9a{/storage/rdd,null,AVAILABLE,@Spark}
21/06/25 10:09:05 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@646d50be{/storage/rdd/json,null,AVAILABLE,@Spark}
21/06/25 10:09:05 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@63a4eefe{/environment,null,AVAILABLE,@Spark}
21/06/25 10:09:05 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@1709ca50{/environment/json,null,AVAILABLE,@Spark}
21/06/25 10:09:05 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@622b9125{/executors,null,AVAILABLE,@Spark}
21/06/25 10:09:05 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@6ccdd57e{/executors/json,null,AVAILABLE,@Spark}
21/06/25 10:09:05 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@28da1507{/executors/threadDump,null,AVAILABLE,@Spark}
21/06/25 10:09:05 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@71d81106{/executors/threadDump/json,null,AVAILABLE,@Spark}
21/06/25 10:09:05 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@4f4414a1{/static,null,AVAILABLE,@Spark}
21/06/25 10:09:05 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@3de3281d{/,null,AVAILABLE,@Spark}
21/06/25 10:09:05 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@541cefbf{/api,null,AVAILABLE,@Spark}
21/06/25 10:09:05 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@c1792a4{/jobs/job/kill,null,AVAILABLE,@Spark}
21/06/25 10:09:05 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@27f45fc{/stages/stage/kill,null,AVAILABLE,@Spark}
21/06/25 10:09:05 INFO ui.SparkUI: Bound SparkUI to 0.0.0.0, and started at http://cxln4.c.thelab-240901.internal:4041
21/06/25 10:09:05 INFO executor.Executor: Starting executor ID driver on host localhost
21/06/25 10:09:05 INFO util.Utils: Successfully started service 'org.apache.spark.network.netty.NettyBlockTransferService' on port 45896.
21/06/25 10:09:05 INFO netty.NettyBlockTransferService: Server created on cxln4.c.thelab-240901.internal:45896
21/06/25 10:09:05 INFO storage.BlockManager: Using org.apache.spark.storage.RandomBlockReplicationPolicy for block replication policy
21/06/25 10:09:05 INFO storage.BlockManagerMaster: Registering BlockManager BlockManagerId(driver, cxln4.c.thelab-240901.internal, 45896, None)
21/06/25 10:09:05 INFO storage.BlockManagerMasterEndpoint: Registering block manager cxln4.c.thelab-240901.internal:45896 with 93.3 MB RAM, BlockManagerId(driver, cxln4.c.thelab-240901.internal, 45896, None)
21/06/25 10:09:05 INFO storage.BlockManagerMaster: Registered BlockManager BlockManagerId(driver, cxln4.c.thelab-240901.internal, 45896, None)
21/06/25 10:09:05 INFO storage.BlockManager: Initialized BlockManager: BlockManagerId(driver, cxln4.c.thelab-240901.internal, 45896, None)
21/06/25 10:09:05 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@75e5fce{/metrics/json,null,AVAILABLE,@Spark}
21/06/25 10:09:07 INFO scheduler.EventLoggingListener: Logging events to hdfs:/spark2-history/local-1624615745252
21/06/25 10:09:07 INFO internal.SharedState: loading hive config file: file:/usr/spark2.4.3/conf/hive-site.xml
21/06/25 10:09:07 INFO internal.SharedState: spark.sql.warehouse.dir is not set, but hive.metastore.warehouse.dir is set. Setting spark.sql.warehouse.dir to the value of hive.metastore.warehouse.dir ('/apps/hive/warehouse').
21/06/25 10:09:07 INFO internal.SharedState: Warehouse path is '/apps/hive/warehouse'.
21/06/25 10:09:07 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@47cbdc0b{/SQL,null,AVAILABLE,@Spark}
21/06/25 10:09:07 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@27fd9857{/SQL/json,null,AVAILABLE,@Spark}
21/06/25 10:09:07 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@55b9087{/SQL/execution,null,AVAILABLE,@Spark}
21/06/25 10:09:07 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@71e607d9{/SQL/execution/json,null,AVAILABLE,@Spark}
21/06/25 10:09:07 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@55290319{/static/sql,null,AVAILABLE,@Spark}
21/06/25 10:09:07 INFO state.StateStoreCoordinatorRef: Registered StateStoreCoordinator endpoint
21/06/25 10:09:08 INFO spark.SparkContext: Starting job: reduce at /usr/spark2.4.3/examples/src/main/python/pi.py:44
21/06/25 10:09:08 INFO scheduler.DAGScheduler: Got job 0 (reduce at /usr/spark2.4.3/examples/src/main/python/pi.py:44) with 2 output partitions
21/06/25 10:09:08 INFO scheduler.DAGScheduler: Final stage: ResultStage 0 (reduce at /usr/spark2.4.3/examples/src/main/python/pi.py:44)
21/06/25 10:09:08 INFO scheduler.DAGScheduler: Parents of final stage: List()
21/06/25 10:09:08 INFO scheduler.DAGScheduler: Missing parents: List()
21/06/25 10:09:08 INFO scheduler.DAGScheduler: Submitting ResultStage 0 (PythonRDD[1] at reduce at /usr/spark2.4.3/examples/src/main/python/pi.py:44), which has no missing parents
21/06/25 10:09:08 INFO memory.MemoryStore: Block broadcast_0 stored as values in memory (estimated size 6.1 KB, free 93.3 MB)
21/06/25 10:09:08 INFO memory.MemoryStore: Block broadcast_0_piece0 stored as bytes in memory (estimated size 4.2 KB, free 93.3 MB)
21/06/25 10:09:08 INFO storage.BlockManagerInfo: Added broadcast_0_piece0 in memory on cxln4.c.thelab-240901.internal:45896 (size: 4.2 KB, free: 93.3 MB)
21/06/25 10:09:08 INFO spark.SparkContext: Created broadcast 0 from broadcast at DAGScheduler.scala:1161
21/06/25 10:09:08 INFO scheduler.DAGScheduler: Submitting 2 missing tasks from ResultStage 0 (PythonRDD[1] at reduce at /usr/spark2.4.3/examples/src/main/python/pi.py:44) (first 15 tasks are for partitions Vector(0, 1))
21/06/25 10:09:08 INFO scheduler.TaskSchedulerImpl: Adding task set 0.0 with 2 tasks
21/06/25 10:09:08 INFO scheduler.TaskSetManager: Starting task 0.0 in stage 0.0 (TID 0, localhost, executor driver, partition 0, PROCESS_LOCAL, 7852 bytes)
21/06/25 10:09:08 INFO scheduler.TaskSetManager: Starting task 1.0 in stage 0.0 (TID 1, localhost, executor driver, partition 1, PROCESS_LOCAL, 7852 bytes)
21/06/25 10:09:08 INFO executor.Executor: Running task 0.0 in stage 0.0 (TID 0)
21/06/25 10:09:08 INFO executor.Executor: Running task 1.0 in stage 0.0 (TID 1)
21/06/25 10:09:08 ERROR executor.Executor: Exception in task 1.0 in stage 0.0 (TID 1)
org.apache.spark.SparkException:
Error from python worker:
  /usr/local/anaconda/bin/python: Error while finding module specification for 'pyspark.daemon' (AttributeError: module 'pyspark' has no attribute '__path__')
PYTHONPATH was:
  /usr/spark2.4.3/python/lib/pyspark.zip:/usr/spark2.4.3/python/lib/py4j-0.10.7-src.zip:/usr/spark2.4.3/jars/spark-core_2.11-2.4.3.jar:/usr/spark2.4.3/python/:/python/:
org.apache.spark.SparkException: No port number in pyspark.daemon's stdout
    at org.apache.spark.api.python.PythonWorkerFactory.startDaemon(PythonWorkerFactory.scala:204)
    at org.apache.spark.api.python.PythonWorkerFactory.createThroughDaemon(PythonWorkerFactory.scala:122)
    at org.apache.spark.api.python.PythonWorkerFactory.create(PythonWorkerFactory.scala:95)
    at org.apache.spark.SparkEnv.createPythonWorker(SparkEnv.scala:117)
    at org.apache.spark.api.python.BasePythonRunner.compute(PythonRunner.scala:108)
    at org.apache.spark.api.python.PythonRDD.compute(PythonRDD.scala:65)
    at org.apache.spark.rdd.RDD.computeOrReadCheckpoint(RDD.scala:324)
    at org.apache.spark.rdd.RDD.iterator(RDD.scala:288)
    at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:90)
    at org.apache.spark.scheduler.Task.run(Task.scala:121)
    at org.apache.spark.executor.Executor$TaskRunner$$anonfun$10.apply(Executor.scala:408)
    at org.apache.spark.util.Utils$.tryWithSafeFinally(Utils.scala:1360)
    at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:414)
    at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)
    at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)
    at java.lang.Thread.run(Thread.java:745)
21/06/25 10:09:08 WARN scheduler.TaskSetManager: Lost task 1.0 in stage 0.0 (TID 1, localhost, executor driver): org.apache.spark.SparkException:
Error from python worker:
  /usr/local/anaconda/bin/python: Error while finding module specification for 'pyspark.daemon' (AttributeError: module 'pyspark' has no attribute '__path__')
PYTHONPATH was:
  /usr/spark2.4.3/python/lib/pyspark.zip:/usr/spark2.4.3/python/lib/py4j-0.10.7-src.zip:/usr/spark2.4.3/jars/spark-core_2.11-2.4.3.jar:/usr/spark2.4.3/python/:/python/:
org.apache.spark.SparkException: No port number in pyspark.daemon's stdout
    at org.apache.spark.api.python.PythonWorkerFactory.startDaemon(PythonWorkerFactory.scala:204)
    at org.apache.spark.api.python.PythonWorkerFactory.createThroughDaemon(PythonWorkerFactory.scala:122)
    at org.apache.spark.api.python.PythonWorkerFactory.create(PythonWorkerFactory.scala:95)
    at org.apache.spark.SparkEnv.createPythonWorker(SparkEnv.scala:117)
    at org.apache.spark.api.python.BasePythonRunner.compute(PythonRunner.scala:108)
    at org.apache.spark.api.python.PythonRDD.compute(PythonRDD.scala:65)
    at org.apache.spark.rdd.RDD.computeOrReadCheckpoint(RDD.scala:324)
    at org.apache.spark.rdd.RDD.iterator(RDD.scala:288)
    at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:90)
    at org.apache.spark.scheduler.Task.run(Task.scala:121)
    at org.apache.spark.executor.Executor$TaskRunner$$anonfun$10.apply(Executor.scala:408)
    at org.apache.spark.util.Utils$.tryWithSafeFinally(Utils.scala:1360)
    at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:414)
    at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)
    at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)
    at java.lang.Thread.run(Thread.java:745)
21/06/25 10:09:08 ERROR scheduler.TaskSetManager: Task 1 in stage 0.0 failed 1 times; aborting job
21/06/25 10:09:08 INFO scheduler.TaskSchedulerImpl: Cancelling stage 0
21/06/25 10:09:08 INFO scheduler.TaskSchedulerImpl: Killing all running tasks in stage 0: Stage cancelled
21/06/25 10:09:08 INFO scheduler.TaskSchedulerImpl: Stage 0 was cancelled
21/06/25 10:09:08 INFO executor.Executor: Executor is trying to kill task 0.0 in stage 0.0 (TID 0), reason: Stage cancelled
21/06/25 10:09:08 INFO scheduler.DAGScheduler: ResultStage 0 (reduce at /usr/spark2.4.3/examples/src/main/python/pi.py:44) failed in 0.478 s due to Job aborted due to stage failure: Task 1 in stage 0.0 failed 1 times, most recent failure: Lost task 1.0 in stage 0.0 (TID 1, localhost, executor driver): org.apache.spark.SparkException:
Error from python worker:
  /usr/local/anaconda/bin/python: Error while finding module specification for 'pyspark.daemon' (AttributeError: module 'pyspark' has no attribute '__path__')
PYTHONPATH was:
  /usr/spark2.4.3/python/lib/pyspark.zip:/usr/spark2.4.3/python/lib/py4j-0.10.7-src.zip:/usr/spark2.4.3/jars/spark-core_2.11-2.4.3.jar:/usr/spark2.4.3/python/:/python/:
org.apache.spark.SparkException: No port number in pyspark.daemon's stdout
    at org.apache.spark.api.python.PythonWorkerFactory.startDaemon(PythonWorkerFactory.scala:204)
    at org.apache.spark.api.python.PythonWorkerFactory.createThroughDaemon(PythonWorkerFactory.scala:122)
    at org.apache.spark.api.python.PythonWorkerFactory.create(PythonWorkerFactory.scala:95)
    at org.apache.spark.SparkEnv.createPythonWorker(SparkEnv.scala:117)
    at org.apache.spark.api.python.BasePythonRunner.compute(PythonRunner.scala:108)
    at org.apache.spark.api.python.PythonRDD.compute(PythonRDD.scala:65)
    at org.apache.spark.rdd.RDD.computeOrReadCheckpoint(RDD.scala:324)
    at org.apache.spark.rdd.RDD.iterator(RDD.scala:288)
    at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:90)
    at org.apache.spark.scheduler.Task.run(Task.scala:121)
    at org.apache.spark.executor.Executor$TaskRunner$$anonfun$10.apply(Executor.scala:408)
    at org.apache.spark.util.Utils$.tryWithSafeFinally(Utils.scala:1360)
    at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:414)
    at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)
    at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)
    at java.lang.Thread.run(Thread.java:745)
Driver stacktrace:
21/06/25 10:09:08 INFO executor.Executor: Executor interrupted and killed task 0.0 in stage 0.0 (TID 0), reason: Stage cancelled
21/06/25 10:09:08 WARN scheduler.TaskSetManager: Lost task 0.0 in stage 0.0 (TID 0, localhost, executor driver): TaskKilled (Stage cancelled)
21/06/25 10:09:08 INFO scheduler.TaskSchedulerImpl: Removed TaskSet 0.0, whose tasks have all completed, from pool
21/06/25 10:09:08 INFO scheduler.DAGScheduler: Job 0 failed: reduce at /usr/spark2.4.3/examples/src/main/python/pi.py:44, took 0.566778 s
Traceback (most recent call last):
  File "/usr/spark2.4.3/examples/src/main/python/pi.py", line 44, in <module>
    count = spark.sparkContext.parallelize(range(1, n + 1), partitions).map(f).reduce(add)
  File "/usr/spark2.4.3/python/lib/pyspark.zip/pyspark/rdd.py", line 844, in reduce
  File "/usr/spark2.4.3/python/lib/pyspark.zip/pyspark/rdd.py", line 816, in collect
  File "/usr/spark2.4.3/python/lib/py4j-0.10.7-src.zip/py4j/java_gateway.py", line 1257, in __call__
  File "/usr/spark2.4.3/python/lib/pyspark.zip/pyspark/sql/utils.py", line 63, in deco
  File "/usr/spark2.4.3/python/lib/py4j-0.10.7-src.zip/py4j/protocol.py", line 328, in get_return_value
py4j.protocol.Py4JJavaError: An error occurred while calling z:org.apache.spark.api.python.PythonRDD.collectAndServe.
: org.apache.spark.SparkException: Job aborted due to stage failure: Task 1 in stage 0.0 failed 1 times, most recent failure: Lost task 1.0 in stage 0.0 (TID 1, localhost, executor driver): org.apache.spark.SparkException:
Error from python worker:
  /usr/local/anaconda/bin/python: Error while finding module specification for 'pyspark.daemon' (AttributeError: module 'pyspark' has no attribute '__path__')
PYTHONPATH was:
  /usr/spark2.4.3/python/lib/pyspark.zip:/usr/spark2.4.3/python/lib/py4j-0.10.7-src.zip:/usr/spark2.4.3/jars/spark-core_2.11-2.4.3.jar:/usr/spark2.4.3/python/:/python/:
org.apache.spark.SparkException: No port number in pyspark.daemon's stdout
    at org.apache.spark.api.python.PythonWorkerFactory.startDaemon(PythonWorkerFactory.scala:204)
    at org.apache.spark.api.python.PythonWorkerFactory.createThroughDaemon(PythonWorkerFactory.scala:122)
    at org.apache.spark.api.python.PythonWorkerFactory.create(PythonWorkerFactory.scala:95)
    at org.apache.spark.SparkEnv.createPythonWorker(SparkEnv.scala:117)
    at org.apache.spark.api.python.BasePythonRunner.compute(PythonRunner.scala:108)
    at org.apache.spark.api.python.PythonRDD.compute(PythonRDD.scala:65)
    at org.apache.spark.rdd.RDD.computeOrReadCheckpoint(RDD.scala:324)
    at org.apache.spark.rdd.RDD.iterator(RDD.scala:288)
    at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:90)
    at org.apache.spark.scheduler.Task.run(Task.scala:121)
    at org.apache.spark.executor.Executor$TaskRunner$$anonfun$10.apply(Executor.scala:408)
    at org.apache.spark.util.Utils$.tryWithSafeFinally(Utils.scala:1360)
    at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:414)
    at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)
    at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)
    at java.lang.Thread.run(Thread.java:745)
Driver stacktrace:
    at org.apache.spark.scheduler.DAGScheduler.org$apache$spark$scheduler$DAGScheduler$$failJobAndIndependentStages(DAGScheduler.scala:1889)
    at org.apache.spark.scheduler.DAGScheduler$$anonfun$abortStage$1.apply(DAGScheduler.scala:1877)
    at org.apache.spark.scheduler.DAGScheduler$$anonfun$abortStage$1.apply(DAGScheduler.scala:1876)
    at scala.collection.mutable.ResizableArray$class.foreach(ResizableArray.scala:59)
    at scala.collection.mutable.ArrayBuffer.foreach(ArrayBuffer.scala:48)
    at org.apache.spark.scheduler.DAGScheduler.abortStage(DAGScheduler.scala:1876)
    at org.apache.spark.scheduler.DAGScheduler$$anonfun$handleTaskSetFailed$1.apply(DAGScheduler.scala:926)
    at org.apache.spark.scheduler.DAGScheduler$$anonfun$handleTaskSetFailed$1.apply(DAGScheduler.scala:926)
    at scala.Option.foreach(Option.scala:257)
    at org.apache.spark.scheduler.DAGScheduler.handleTaskSetFailed(DAGScheduler.scala:926)
    at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.doOnReceive(DAGScheduler.scala:2110)
    at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.onReceive(DAGScheduler.scala:2059)
    at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.onReceive(DAGScheduler.scala:2048)
    at org.apache.spark.util.EventLoop$$anon$1.run(EventLoop.scala:49)
    at org.apache.spark.scheduler.DAGScheduler.runJob(DAGScheduler.scala:737)
    at org.apache.spark.SparkContext.runJob(SparkContext.scala:2061)
    at org.apache.spark.SparkContext.runJob(SparkContext.scala:2082)
    at org.apache.spark.SparkContext.runJob(SparkContext.scala:2101)
    at org.apache.spark.SparkContext.runJob(SparkContext.scala:2126)
    at org.apache.spark.rdd.RDD$$anonfun$collect$1.apply(RDD.scala:945)
    at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:151)
    at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:112)
    at org.apache.spark.rdd.RDD.withScope(RDD.scala:363)
    at org.apache.spark.rdd.RDD.collect(RDD.scala:944)
    at org.apache.spark.api.python.PythonRDD$.collectAndServe(PythonRDD.scala:166)
    at org.apache.spark.api.python.PythonRDD.collectAndServe(PythonRDD.scala)
    at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
    at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
    at java.lang.reflect.Method.invoke(Method.java:498)
    at py4j.reflection.MethodInvoker.invoke(MethodInvoker.java:244)
    at py4j.reflection.ReflectionEngine.invoke(ReflectionEngine.java:357)
    at py4j.Gateway.invoke(Gateway.java:282)
    at py4j.commands.AbstractCommand.invokeMethod(AbstractCommand.java:132)
    at py4j.commands.CallCommand.execute(CallCommand.java:79)
    at py4j.GatewayConnection.run(GatewayConnection.java:238)
    at java.lang.Thread.run(Thread.java:745)
Caused by: org.apache.spark.SparkException:
Error from python worker:
  /usr/local/anaconda/bin/python: Error while finding module specification for 'pyspark.daemon' (AttributeError: module 'pyspark' has no attribute '__path__')
PYTHONPATH was:
  /usr/spark2.4.3/python/lib/pyspark.zip:/usr/spark2.4.3/python/lib/py4j-0.10.7-src.zip:/usr/spark2.4.3/jars/spark-core_2.11-2.4.3.jar:/usr/spark2.4.3/python/:/python/:
org.apache.spark.SparkException: No port number in pyspark.daemon's stdout
    at org.apache.spark.api.python.PythonWorkerFactory.startDaemon(PythonWorkerFactory.scala:204)
    at org.apache.spark.api.python.PythonWorkerFactory.createThroughDaemon(PythonWorkerFactory.scala:122)
    at org.apache.spark.api.python.PythonWorkerFactory.create(PythonWorkerFactory.scala:95)
    at org.apache.spark.SparkEnv.createPythonWorker(SparkEnv.scala:117)
    at org.apache.spark.api.python.BasePythonRunner.compute(PythonRunner.scala:108)
    at org.apache.spark.api.python.PythonRDD.compute(PythonRDD.scala:65)
    at org.apache.spark.rdd.RDD.computeOrReadCheckpoint(RDD.scala:324)
    at org.apache.spark.rdd.RDD.iterator(RDD.scala:288)
    at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:90)
    at org.apache.spark.scheduler.Task.run(Task.scala:121)
    at org.apache.spark.executor.Executor$TaskRunner$$anonfun$10.apply(Executor.scala:408)
    at org.apache.spark.util.Utils$.tryWithSafeFinally(Utils.scala:1360)
    at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:414)
    at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)
    at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)
    ... 1 more
21/06/25 10:09:08 INFO storage.BlockManagerInfo: Removed broadcast_0_piece0 on cxln4.c.thelab-240901.internal:45896 in memory (size: 4.2 KB, free: 93.3 MB)
21/06/25 10:09:08 INFO spark.SparkContext: Invoking stop() from shutdown hook
21/06/25 10:09:08 INFO server.AbstractConnector: Stopped Spark@63bacafd{HTTP/1.1,[http/1.1]}{0.0.0.0:4041}
21/06/25 10:09:08 INFO ui.SparkUI: Stopped Spark web UI at http://cxln4.c.thelab-240901.internal:4041
21/06/25 10:09:08 INFO spark.MapOutputTrackerMasterEndpoint: MapOutputTrackerMasterEndpoint stopped!
21/06/25 10:09:08 INFO memory.MemoryStore: MemoryStore cleared
21/06/25 10:09:08 INFO storage.BlockManager: BlockManager stopped
21/06/25 10:09:08 INFO storage.BlockManagerMaster: BlockManagerMaster stopped
21/06/25 10:09:08 INFO scheduler.OutputCommitCoordinator$OutputCommitCoordinatorEndpoint: OutputCommitCoordinator stopped!
21/06/25 10:09:08 INFO spark.SparkContext: Successfully stopped SparkContext
21/06/25 10:09:08 INFO util.ShutdownHookManager: Shutdown hook called
21/06/25 10:09:08 INFO util.ShutdownHookManager: Deleting directory /tmp/spark-88838af5-a836-434f-9588-1585c56a5c4b
21/06/25 10:09:08 INFO util.ShutdownHookManager: Deleting directory /tmp/spark-8c8f49c2-8800-4361-88c4-11a8acb785ab
21/06/25 10:09:08 INFO util.ShutdownHookManager: Deleting directory /tmp/spark-8c8f49c2-8800-4361-88c4-11a8acb785ab/pyspark-69fcb3f6-6313-4f5a-b17f-3f2401d50a03
```
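For reference, the statement that fails at line 44 of `pi.py` is a Monte Carlo estimate of Pi: it maps each number in a range to a random dart throw and reduces the hits with `add`. A local, Spark-free sketch of the same computation, using the built-in `map` and `functools.reduce` in place of the RDD methods, runs entirely in the current interpreter, which helps separate the job's logic from the worker start-up that the log shows failing. The helper names are hypothetical and the dart-throw body is a re-implementation, not code copied from `pi.py`.

```python
import random
from functools import reduce
from operator import add


def throw_dart(_, rng):
    """Return 1 if a random point in the square [-1, 1] x [-1, 1] lands inside the unit circle."""
    x = rng.random() * 2 - 1
    y = rng.random() * 2 - 1
    return 1 if x * x + y * y <= 1 else 0


def estimate_pi(n, seed=None):
    """Estimate Pi from n darts: the circle-to-square area ratio is Pi/4."""
    rng = random.Random(seed)
    hits = reduce(add, map(lambda i: throw_dart(i, rng), range(1, n + 1)))
    return 4.0 * hits / n


if __name__ == "__main__":
    print("Pi is roughly %f" % estimate_pi(100000))
```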

I tried the same code in a notebook, and it throws the same error.
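Since the notebook shows the same error, one thing worth checking there is how `pyspark` resolves. Spark starts its Python workers with `python -m pyspark.daemon`, and the worker error says Python could not find the module specification for `pyspark.daemon`. A `python -m pkg.sub` lookup only works when `pkg` resolves to a package; if something else named `pyspark` (a stray `pyspark.py` is one hypothetical culprit) is found first on the path, the lookup fails. A minimal check using the standard `importlib.util.find_spec`, meant to be run in the same environment as the job; `package_info` is a hypothetical helper:

```python
import importlib.util


def package_info(name):
    """Report where the top-level module `name` resolves and whether it is a package.

    Returns None when nothing named `name` is found. For a top-level name,
    find_spec locates the module without executing it (if it is already
    imported, its existing spec is returned instead).
    """
    spec = importlib.util.find_spec(name)
    if spec is None:
        return None
    return {
        "origin": spec.origin,
        # Packages have submodule search locations; a plain single-file module does not,
        # and `python -m name.submodule` cannot work for it.
        "is_package": spec.submodule_search_locations is not None,
    }


if __name__ == "__main__":
    print(package_info("pyspark"))
```

If `is_package` comes back `False`, the `origin` field shows which file is being picked up instead of Spark's `pyspark` package.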

Can I have an update on this?

I was able to run the example in the following way without setting any environment variables:

`/usr/spark2.4.3/bin/run-example SparkPi 10`

```
....
21/06/29 05:25:11 INFO scheduler.TaskSchedulerImpl: Removed TaskSet 0.0, whose tasks have all completed, from pool
21/06/29 05:25:11 INFO scheduler.DAGScheduler: ResultStage 0 (reduce at SparkPi.scala:38) finished in 0.828 s
21/06/29 05:25:11 INFO scheduler.DAGScheduler: Job 0 finished: reduce at SparkPi.scala:38, took 0.912119 s
Pi is roughly 3.147039147039147
21/06/29 05:25:11 INFO server.AbstractConnector: Stopped Spark@759d81f3{HTTP/1.1,[http/1.1]}{0.0.0.0:4048}
21/06/29 05:25:11 INFO ui.SparkUI: Stopped Spark web UI at http://cxln4.c.thelab-240901.internal:4048
21/06/29 05:25:11 INFO spark.MapOutputTrackerMasterEndpoint: MapOutputTrackerMasterEndpoint stopped!
21/06/29 05:25:11 INFO memory.MemoryStore: MemoryStore cleared
21/06/29 05:25:11 INFO storage.BlockManager: BlockManager stopped
....
```

Can you please run this in YARN mode? It is failing for me.

Can I have a response, please?

Hi Suresh,

The default installation of Spark works well with YARN. The following runs fine:

`run-example --master yarn SparkPi 10`

Also, without YARN, all versions seem to run fine:

`/usr/spark2.4.3/bin/run-example SparkPi 10`

`run-example SparkPi 10`

But the non-default installations do not seem to work with YARN:

`/usr/spark2.4.3/bin/run-example --master yarn SparkPi 10`

It throws an exception about a missing class:

`java.lang.NoClassDefFoundError: com/sun/jersey/api/client/config/ClientConfig`

I am trying to figure out how YARN can work with other versions of Spark.

Hi Suresh,

The main reason for this error is that `run-example` depends on a specific version of Jersey, and there is a version conflict.
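The missing class in the YARN error, `com.sun.jersey.api.client.config.ClientConfig`, belongs to Jersey 1.x (package `com.sun.jersey`), while Jersey 2.x lives under `org.glassfish.jersey`. To see which Jersey generations an installation carries, one rough approach is to group the jar file names in its `jars` directory by major version. This sketch assumes the usual `jersey-<module>-<version>.jar` naming, which is a heuristic rather than a guarantee; `jersey_major_versions` is a hypothetical helper, and it only reports what is present rather than resolving the conflict.

```python
import os
import re
import sys
from collections import defaultdict

# Heuristic: Jersey jars are usually named jersey-<module>-<version>.jar,
# e.g. jersey-core-1.9.jar (1.x) or jersey-client-2.22.2.jar (2.x).
JERSEY_JAR = re.compile(r"^jersey-[\w-]+?-(\d+)\.[\d.]+\.jar$")


def jersey_major_versions(jar_names):
    """Group Jersey jar file names by their major version number."""
    groups = defaultdict(list)
    for name in jar_names:
        match = JERSEY_JAR.match(name)
        if match:
            groups[int(match.group(1))].append(name)
    return dict(groups)


if __name__ == "__main__":
    # Pass a jars directory as the first argument, e.g. /usr/spark2.4.3/jars
    print(jersey_major_versions(sorted(os.listdir(sys.argv[1]))))
```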

In your own projects, however, this error should not occur, because you can bundle your dependencies through the POM file.

You can take a look at how to write a Spark application here: