Is it recommended to use Hue?

Thank you for this question. Let me describe what Hue is before answering your question.

What is Hue?

Hue is an open-source web interface, originally developed by Cloudera, for interacting with various components of the Hadoop ecosystem (such as HDFS, Hive and Oozie).

Should we learn to use Hue?

The quick answer is no. My reasons are as follows:

Make yourself comfortable with the console

With the Hue UI available, we end up not practising commands from the console. Console commands are the most powerful way to interact with the various components, because anything you can type at the console can also be put into a script, which makes the console the first step towards automation.
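As a small illustration of that automation point, here is a minimal sketch, not an official CloudxLab script. The function names `run` and `upload_all`, the `DRY_RUN` switch and all paths are hypothetical; only `hadoop fs -mkdir -p` and `hadoop fs -put` are real Hadoop commands.

```shell
# Sketch: upload every .txt file in a local directory to an HDFS directory.
# DRY_RUN is a convenience of this sketch, not a Hadoop feature:
# when DRY_RUN=1 the commands are printed instead of executed.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

upload_all() {
  local src_dir="$1" dest_dir="$2"
  # Create the target HDFS directory (no error if it already exists).
  run hadoop fs -mkdir -p "$dest_dir" || return 1
  for f in "$src_dir"/*.txt; do
    [ -e "$f" ] || continue          # skip when the glob matched nothing
    run hadoop fs -put "$f" "$dest_dir/" || return 1
  done
}
```

For example, `DRY_RUN=1 upload_all ~/datasets /user/your_username/datasets` (both paths are placeholders) prints the commands so you can review them before running them for real.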

Not every organization provides Hue

When you work on a big data project at a company, the chance of that company having Hue is very low because:

- Hue exposes a web interface and therefore increases the attack surface.

- Hue consumes memory and other resources.

- Making Hue work with the various components is a big overhead.

So, organizations prefer to provide shell access instead of a UI.

Not every component can be used through Hue

Since the Apache Hadoop and Spark ecosystem is very dynamic, it takes a while for the corresponding UIs to be developed in Hue and made available. Sometimes the features in Hue are not stable enough.

So, it becomes unreliable for enterprise work.

So, our request to everyone is to use the console (aka ssh, bash or terminal) instead of Hue. The initial learning curve of the console is a bit steep, but using the console is more practical, productive and satisfying.

I agree with you, keeping in mind an environment close to production. Here we use CloudxLab to learn. For example, if we want to upload a file from our local system to HDFS, what is the option?

Currently, we don't have Hue, and we cannot connect through WinSCP.

Leaving production aside: for learning, if we want hands-on practice based on datasets on our local machine, how can we get the data into HDFS?

Hi Yogendra,

For copying a folder from the CloudxLab local file system to HDFS, you can use any of the following:

- hadoop fs -copyFromLocal Your_cloudxlab_localpath hdfspath

- hadoop fs -copyFromLocal Your_cloudxlab_localpath

- hadoop fs -put Your_cloudxlab_localpath Your_cloudxlab_hdfspath

- hadoop fs -put Your_cloudxlab_localpath

When the HDFS path is omitted, the folder is copied into your HDFS home directory.

All of these commands are already covered in the lectures.

The console is the best way to learn and do hands-on practice!

All the best!

By local I mean the laptop. Let me explain in detail. I have a 2 MB file, sample.txt, which I need to analyse in Hive. What I would normally do is use Hue to upload sample.txt from my local machine (the laptop or desktop we are working on) to HDFS. I hope this is clear.

As mentioned

- Your_cloudxlab_localpath means Your Cloudxlab home directory path. like /home/Your_cloudxlab_username

- hdfspath means the hdfs path , starts with /data in CloudXLab.

For confirmation use this.

-

Check the path for both local and hdfs.

-

Type ls is your console. Check which folder you want to copy

-

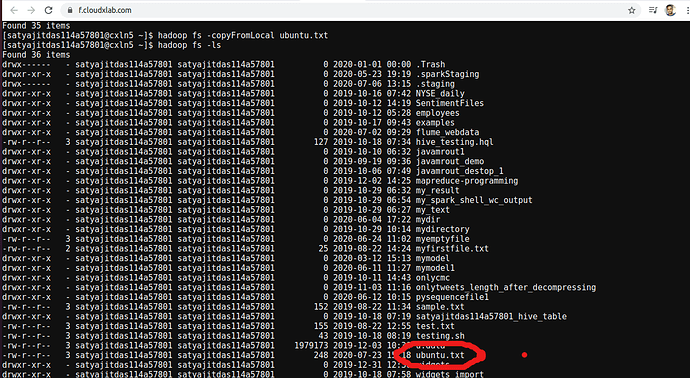

write the command in console hadoop fs -copyFromLocal Your_cloudxlab_localpath Your_hdfspath

-

Check if the folder is copied or not by hadoop fs -ls in console you must see the copied directory.

If you want to copy the folder from your local laptop, you first need to copy it from your laptop to your CloudxLab Linux home directory.

Use scp for this.

Kindly refer to the link below for details.

https://cloudxlab.com/faq/20/how-should-i-upload-files-from-my-local-machine-to-cloudxlab

Note: Everything is explained very clearly in the lectures!

All the best!

It seems that you are using Windows.

As I have already said, if you want to copy the folder from your local laptop, you first need to copy it from your laptop to your CloudxLab Linux home directory.

Use the WinSCP client for this.

I hope this clears your doubt with respect to Hue.

Refer to this video:

I have read your posts completely, one by one. If you edit them, information will be lost.

May I know whether you have tried using WinSCP to move the files?

What issue are you getting while transferring them?

Let me know and let us solve this first; then you can test my knowledge.

And I am not here for any other kind of communication! I am here to solve problems!

Tell me what issue you are getting and I will try to resolve it. Is it a host error, a server connection error, or something else?

Below are the WinSCP FAQs on refused connections:

https://winscp.net/eng/docs/message_connection_refused

https://winscp.net/eng/docs/faq_connection_refused


Hey Satyajit,

I think Yogendra understands this part. His question is about copying the file from his laptop to the CloudxLab Linux file system.

He is not able to use WinSCP to upload data to the Linux home directory, from where it could then be uploaded to HDFS.

I would suggest that you test whether you are able to copy files to the CloudxLab server using WinSCP, FileZilla or any other scp client.

It works pretty well for me using command-line scp from a MacBook Air.

Hi Sandeep Giri,

Thanks a lot for easing this thread. It was heading in another direction, which I wanted to stop somehow with a viable solution.

You are right, my response meant that since neither Hue nor WinSCP is working, what is the other way? However, I found one myself: I manually created a file in the CloudxLab local file system, pasted the contents into it, and then loaded it into HDFS. This served the purpose for me.

Thank you again.

Hey Yogendra,

I think it is a wrong choice of words from Satyajit to use “addiction” instead of “doubt”. I have corrected that.

Let me try to express it again. Without Hue, uploading files to HDFS is now a two-step process.

First, you have to upload the files from your laptop/desktop to your Linux home directory at CloudxLab. This can be done using WinSCP, as mentioned above in a video link posted by Satyajit.

Then, upload it to HDFS using “hadoop fs -put …” or “hadoop fs -copyFromLocal …”
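The two steps above can be sketched as follows. This is only an illustration under stated assumptions: `CXL_USER` and `CXL_HOST` are placeholders (the default host is the one that appears in an scp command elsewhere in this thread, and yours may differ), and `run`, `to_cloudxlab`, `to_hdfs` and `DRY_RUN` are hypothetical helper names. Windows users would do step 1 with WinSCP instead of scp.

```shell
# Placeholders (assumptions): replace with your own CloudxLab login and host.
CXL_USER="${CXL_USER:-your_cloudxlab_username}"
CXL_HOST="${CXL_HOST:-f.cloudxlab.com}"

# Print the command instead of running it when DRY_RUN=1 (a convenience of this sketch).
run() { if [ "${DRY_RUN:-0}" = "1" ]; then echo "$*"; else "$@"; fi; }

# Step 1, run on your laptop: copy the file to your CloudxLab Linux home directory.
to_cloudxlab() {
  run scp "$1" "$CXL_USER@$CXL_HOST:"
}

# Step 2, run in the CloudxLab console: copy the file from the Linux home
# directory into HDFS, then list the destination to confirm it arrived.
to_hdfs() {
  run hadoop fs -put "$1" "$2" && run hadoop fs -ls "$2"
}
```

On the laptop you would call `to_cloudxlab sample.txt`; after logging in to the console you would call `to_hdfs sample.txt some_hdfs_dir`, where `some_hdfs_dir` stands for whatever HDFS path you have write access to.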

I hope I was able to explain.

Hi Sandeep,

Thank you, Sandeep. It is fine now, as I was able to upload the file by creating a new file, copying the contents into it, and then loading it into HDFS.

Hi, Sandeep Sir.

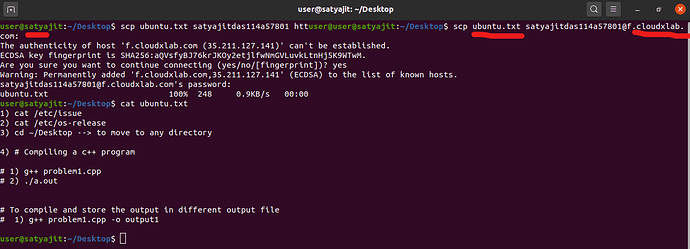

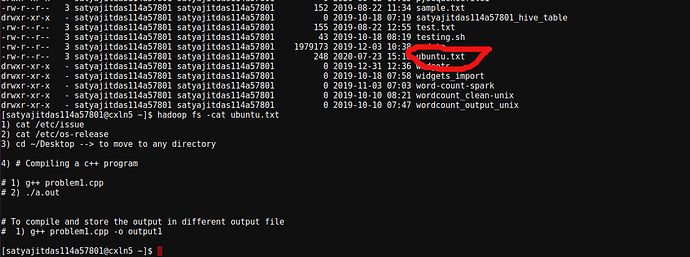

I have tried and able to do transfer the files as I mentioned previously.

I am using ubuntu so used scp protocol client.

-

The File named ubuntu.txt is at my Local Laptop transferring through scp protocol client !

using scp ubuntu.txt satyajitdas114a57801@f.cloudxlab.com:

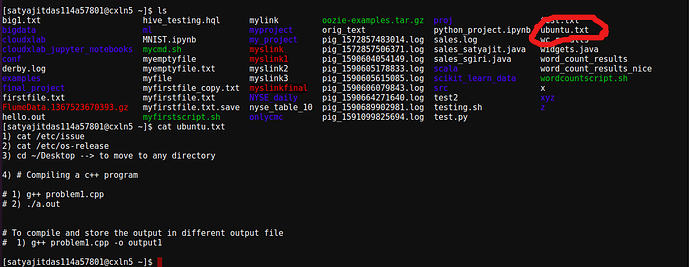

- The File ubuntu.txt is at my CloudxLab local user linux path.

- The File name Ubuntu.txt has been transferred via

hadoop fs -copyFromLocal ubuntu.txt Hadoop command.

- Check the content using the hadoop command.

hadoop fs -cat ubuntu.txt

Now since the files are in Hadoop clusters we can run any hadoop or Hive commands on the file seamlessly using terminal.

Thank you.

Good. If someone has Windows, it would be good to test WinSCP too.

I am not able to log in to Hue with the ID sunny040419922779.

I understand the explanation. However, the videos explain the steps using Hue. If the intent is not to provide Hue, why use unrelated videos? Isn't it misleading, since we are trying to follow the videos and do the hands-on exercises?

Sir, if Hue is not important to learn, why do you teach it in the video tutorials? On one side there is a lot of material about Hue in the videos, and on the other side Hue is not available for practice or hands-on experience. I do not know what the issue is, but I am sorry to say it is not fair.