org.apache.hadoop.ipc.RemoteException: The DiskSpace quota of /user/bdm05848695 is exceeded: quota = 4294967296 B = 4 GB but diskspace consumed = 4429189317 B = 4.13 GB
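For reference, the figures in the error can be checked with plain shell arithmetic. This is a sketch using only the two numbers quoted above. An HDFS space quota counts every replica of each block, so the replication factor matters. The value of 3 used here is an assumption (the HDFS default); the cluster's actual `dfs.replication` setting is not stated.

```shell
#!/bin/sh
# Figures copied from the RemoteException message above.
quota=4294967296      # space quota in bytes (4 GB)
consumed=4429189317   # disk space consumed in bytes (4.13 GB)
# ASSUMPTION: replication factor of 3 (HDFS default); not stated in the error.
replication=3

# How far over the quota the directory is, in bytes and whole MiB.
over=$((consumed - quota))
echo "over quota by: $over bytes ($((over / 1048576)) MiB)"

# Approximate logical (pre-replication) data size, assuming every file
# uses the same replication factor.
echo "logical data at replication $replication: $((consumed / replication)) bytes"
```

Under that assumption, roughly 1.4 GB of actual file data is enough to exceed a 4 GB space quota, and about 128 MiB of replicated data would need to be freed to get back under the limit.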

I tried running:

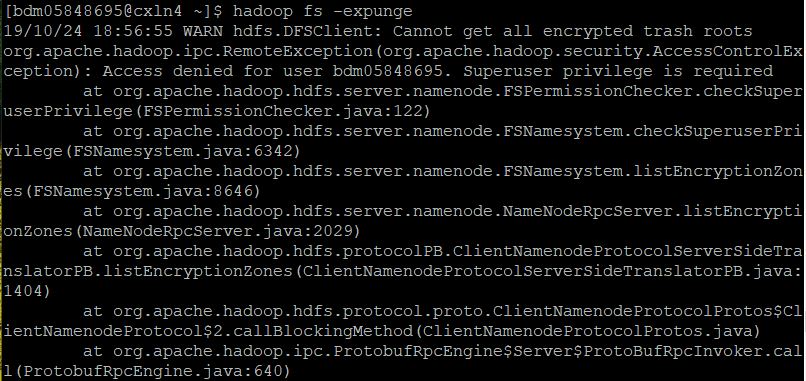

`hadoop fs -expunge`

I get the error "Superuser privilege is required".

Kindly help.

Also, is it important to keep files in the Hadoop user directory? Will they be considered for certification?