Hi Team,

I am working on integrating PySpark with HBase. I started the PySpark session as follows.

pyspark --jars /usr/hdp/current/shc/shc-core-1.1.0.2.6.5.0-292.jar,/usr/hdp/current/hbase-client/lib/htrace-core-3.1.0-incubating.jar,/usr/hdp/current/hbase-client/lib/hbase-client.jar,/usr/hdp/current/hbase-client/lib/hbase-common.jar,/usr/hdp/current/hbase-client/lib/hbase-server.jar,/usr/hdp/current/hbase-client/lib/guava-12.0.1.jar,/usr/hdp/current/hbase-client/lib/hbase-protocol.jar,/usr/hdp/current/hbase-client/lib/htrace-core-3.1.0-incubating.jar --files /usr/hdp/current/hbase-client/conf/hbase-site.xml

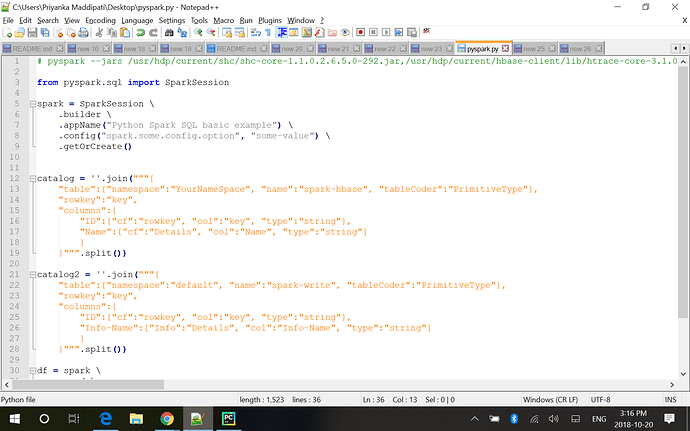

The following is the code I ran.

from pyspark.sql import SparkSession

spark = SparkSession \
    .builder \
    .appName("Python Spark SQL basic example") \
    .config("spark.some.config.option", "some-value") \
    .getOrCreate()

catalog = ''.join("""{
    "table":{"namespace":"YourNameSpace", "name":"spark-hbase", "tableCoder":"PrimitiveType"},
    "rowkey":"key",
    "columns":{
        "ID":{"cf":"rowkey", "col":"key", "type":"string"},
        "Name":{"cf":"Details", "col":"Name", "type":"string"}
    }
}""".split())

catalog2 = ''.join("""{
    "table":{"namespace":"default", "name":"spark-write", "tableCoder":"PrimitiveType"},
    "rowkey":"key",
    "columns":{
        "ID":{"cf":"rowkey", "col":"key", "type":"string"},
        "Info-Name":{"Info":"Details", "col":"Info-Name", "type":"string"}
    }
}""".split())

df = spark \
    .read \
    .options(catalog=catalog) \
    .format('org.apache.spark.sql.execution.datasources.hbase') \
    .load()

df.show()

End of script
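As a side note on the catalog strings above: the `''.join(... .split())` idiom strips every whitespace character, including any inside a value, and it does not catch a misspelled key. A minimal sketch of building the same catalog with `json.dumps` is shown here. The helper name `make_catalog` and its parameters are hypothetical, introduced only for illustration; the key names (`table`, `rowkey`, `columns`, `cf`, `col`, `type`) are the ones used in the script above.

```python
import json


def make_catalog(namespace, table, columns, rowkey="key", table_coder="PrimitiveType"):
    """Build a catalog JSON string like the ones in the script above.

    `columns` maps a DataFrame column name to a (column family, qualifier, type)
    tuple. This helper is hypothetical; it only serializes a dict to JSON.
    """
    return json.dumps({
        "table": {"namespace": namespace, "name": table, "tableCoder": table_coder},
        "rowkey": rowkey,
        "columns": {
            name: {"cf": cf, "col": col, "type": typ}
            for name, (cf, col, typ) in columns.items()
        },
    })


# Same content as the first catalog in the script above.
catalog = make_catalog(
    "YourNameSpace",
    "spark-hbase",
    {
        "ID": ("rowkey", "key", "string"),
        "Name": ("Details", "Name", "string"),
    },
)
```

The resulting string can be passed to `.options(catalog=catalog)` in the same way as before.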

Below is the error I got.

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

File "/usr/hdp/current/spark2-client/python/pyspark/sql/dataframe.py", line 350, in show

print(self._jdf.showString(n, 20, vertical))

File "/usr/hdp/current/spark2-client/python/lib/py4j-0.10.6-src.zip/py4j/java_gateway.py", line 1160, in __call__

File "/usr/hdp/current/spark2-client/python/pyspark/sql/utils.py", line 63, in deco

return f(*a, **kw)

File "/usr/hdp/current/spark2-client/python/lib/py4j-0.10.6-src.zip/py4j/protocol.py", line 320, in get_return_value

py4j.protocol.Py4JJavaError: An error occurred while calling o59.showString.

: org.apache.hadoop.hbase.client.RetriesExhaustedException: Can't get the location for replica 0

at org.apache.hadoop.hbase.client.RpcRetryingCallerWithReadReplicas.getRegionLocations(RpcRetryingCallerWithReadReplicas.java:354)

at org.apache.hadoop.hbase.client.ScannerCallableWithReplicas.call(ScannerCallableWithReplicas.java:159)

at org.apache.hadoop.hbase.client.ScannerCallableWithReplicas.call(ScannerCallableWithReplicas.java:61)

at org.apache.hadoop.hbase.client.RpcRetryingCaller.callWithoutRetries(RpcRetryingCaller.java:211)

at org.apache.hadoop.hbase.client.ClientScanner.call(ClientScanner.java:327)

at org.apache.hadoop.hbase.client.ClientScanner.nextScanner(ClientScanner.java:302)

at org.apache.hadoop.hbase.client.ClientScanner.initializeScannerInConstruction(ClientScanner.java:167)

at org.apache.hadoop.hbase.client.ClientScanner.<init>(ClientScanner.java:162)

at org.apache.hadoop.hbase.client.HTable.getScanner(HTable.java:799)

at org.apache.hadoop.hbase.client.MetaScanner.metaScan(MetaScanner.java:193)

at org.apache.hadoop.hbase.client.MetaScanner.metaScan(MetaScanner.java:89)

at org.apache.hadoop.hbase.client.MetaScanner.listTableRegionLocations(MetaScanner.java:343)

at org.apache.hadoop.hbase.client.HRegionLocator.listRegionLocations(HRegionLocator.java:146)

at org.apache.hadoop.hbase.client.HRegionLocator.getStartEndKeys(HRegionLocator.java:122)

at org.apache.spark.sql.execution.datasources.hbase.RegionResource$$anonfun$1.apply(HBaseResources.scala:112)

at org.apache.spark.sql.execution.datasources.hbase.RegionResource$$anonfun$1.apply(HBaseResources.scala:111)

at org.apache.spark.sql.execution.datasources.hbase.ReferencedResource$class.releaseOnException(HBaseResources.scala:80)

at org.apache.spark.sql.execution.datasources.hbase.RegionResource.releaseOnException(HBaseResources.scala:91)

at org.apache.spark.sql.execution.datasources.hbase.RegionResource.<init>(HBaseResources.scala:111)

at org.apache.spark.sql.execution.datasources.hbase.HBaseTableScanRDD.getPartitions(HBaseTableScan.scala:64)

at org.apache.spark.rdd.RDD$$anonfun$partitions$2.apply(RDD.scala:253)

at org.apache.spark.rdd.RDD$$anonfun$partitions$2.apply(RDD.scala:251)

at scala.Option.getOrElse(Option.scala:121)

at org.apache.spark.rdd.RDD.partitions(RDD.scala:251)

at org.apache.spark.rdd.MapPartitionsRDD.getPartitions(MapPartitionsRDD.scala:35)

at org.apache.spark.rdd.RDD$$anonfun$partitions$2.apply(RDD.scala:253)

at org.apache.spark.rdd.RDD$$anonfun$partitions$2.apply(RDD.scala:251)

at scala.Option.getOrElse(Option.scala:121)

at org.apache.spark.rdd.RDD.partitions(RDD.scala:251)

at org.apache.spark.rdd.MapPartitionsRDD.getPartitions(MapPartitionsRDD.scala:35)

at org.apache.spark.rdd.RDD$$anonfun$partitions$2.apply(RDD.scala:253)

at org.apache.spark.rdd.RDD$$anonfun$partitions$2.apply(RDD.scala:251)

at org.apache.spark.rdd.RDD$$anonfun$partitions$2.apply(RDD.scala:253)

at org.apache.spark.rdd.RDD$$anonfun$partitions$2.apply(RDD.scala:251)

at scala.Option.getOrElse(Option.scala:121)

at org.apache.spark.rdd.RDD.partitions(RDD.scala:251)

at org.apache.spark.sql.execution.SparkPlan.executeTake(SparkPlan.scala:340)

at org.apache.spark.sql.execution.CollectLimitExec.executeCollect(limit.scala:38)

at org.apache.spark.sql.Dataset.org$apache$spark$sql$Dataset$$collectFromPlan(Dataset.scala:3272)

at org.apache.spark.sql.Dataset$$anonfun$head$1.apply(Dataset.scala:2484)

at org.apache.spark.sql.Dataset$$anonfun$head$1.apply(Dataset.scala:2484)

at org.apache.spark.sql.Dataset$$anonfun$52.apply(Dataset.scala:3253)

at org.apache.spark.sql.execution.SQLExecution$.withNewExecutionId(SQLExecution.scala:77)

at org.apache.spark.sql.Dataset.withAction(Dataset.scala:3252)

at org.apache.spark.sql.Dataset.head(Dataset.scala:2484)

at org.apache.spark.sql.Dataset.take(Dataset.scala:2698)

at org.apache.spark.sql.Dataset.showString(Dataset.scala:254)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at py4j.reflection.MethodInvoker.invoke(MethodInvoker.java:244)

at py4j.reflection.ReflectionEngine.invoke(ReflectionEngine.java:357)

at py4j.Gateway.invoke(Gateway.java:282)

at py4j.commands.AbstractCommand.invokeMethod(AbstractCommand.java:132)

at py4j.commands.CallCommand.execute(CallCommand.java:79)

at py4j.GatewayConnection.run(GatewayConnection.java:214)

at java.lang.Thread.run(Thread.java:745)

I have already researched this on Google, but nothing I tried worked. It looks like Spark is not able to connect to HBase/ZooKeeper. Please give me some suggestions.
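To narrow down the suspected HBase/ZooKeeper connection problem, here is a minimal sketch of how the ZooKeeper settings in the `hbase-site.xml` passed via `--files` could be read and checked from the driver host. It assumes the standard HBase property names `hbase.zookeeper.quorum`, `hbase.zookeeper.property.clientPort`, and `zookeeper.znode.parent`, and falls back to the usual defaults (`localhost`, `2181`, `/hbase`) when a property is absent. The function names are hypothetical, and the check only tests whether a TCP connection opens; it does not speak the ZooKeeper protocol.

```python
import socket
import xml.etree.ElementTree as ET


def read_hbase_props(xml_data):
    """Return {name: value} for every <property> in hbase-site.xml content.

    Accepts the file content as str or bytes.
    """
    root = ET.fromstring(xml_data)
    props = {}
    for prop in root.findall("property"):
        name = prop.findtext("name")
        if name:
            props[name.strip()] = (prop.findtext("value") or "").strip()
    return props


def zookeeper_endpoints(props):
    """Return a list of (host, port) pairs for the configured ZooKeeper quorum."""
    # Assumed defaults when the properties are missing from the file.
    quorum = props.get("hbase.zookeeper.quorum", "localhost")
    default_port = int(props.get("hbase.zookeeper.property.clientPort", "2181"))
    endpoints = []
    for entry in quorum.split(","):
        entry = entry.strip()
        if not entry:
            continue
        if ":" in entry:
            # A quorum entry may carry its own port, e.g. "zk1:2182".
            host, port = entry.rsplit(":", 1)
            endpoints.append((host, int(port)))
        else:
            endpoints.append((entry, default_port))
    return endpoints


def can_connect(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port opens within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def report(path):
    """Print the znode parent and the reachability of each quorum member."""
    with open(path, "rb") as f:
        props = read_hbase_props(f.read())
    print("zookeeper.znode.parent =", props.get("zookeeper.znode.parent", "/hbase"))
    for host, port in zookeeper_endpoints(props):
        status = "reachable" if can_connect(host, port) else "NOT reachable"
        print(host, port, status)
```

Calling `report("/usr/hdp/current/hbase-client/conf/hbase-site.xml")` on the machine where `pyspark` was started would show which quorum and znode parent the client configuration points to. If the quorum falls back to `localhost`, or the znode parent does not match what the cluster actually uses, that might explain why the region locations cannot be found.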

I am also attaching the PySpark script I executed, for your reference.

Thanks,

Mukkanti