Unable to import the data

Hi,

Where are you trying to import the data from?

Which exercise are you having a problem with?

What is the error? Please share screenshots.

All of the above information will help me better diagnose your problem.

All the best!

I am getting the same error today (yesterday it was fine).

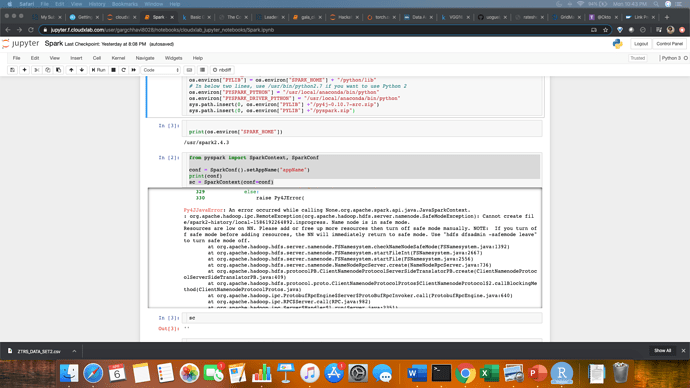

This happens when creating a SparkContext in a Jupyter notebook. Here is the code:

```python
from pyspark import SparkContext, SparkConf

conf = SparkConf().setAppName("appName")
sc = SparkContext(conf=conf)  # this line is throwing an error
```

```
An error occurred while calling None.org.apache.spark.api.java.JavaSparkContext.
: org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.hdfs.server.namenode.SafeModeException): Cannot create file /spark2-history/local-1586192264892.inprogress. Name node is in safe mode.
```
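The error shows SparkContext failing while it tries to create its event log file under `/spark2-history` on HDFS, which the NameNode refuses while it is in safe mode. Here is a minimal sketch for checking the safe mode state before creating the context. It assumes the `hdfs` command-line client is on the notebook's PATH and that `hdfs dfsadmin -safemode get` prints lines like `Safe mode is ON` or `Safe mode is OFF` (HA clusters may print one line per NameNode, with a host suffix). The helper names `parse_safemode_output` and `namenode_in_safemode` are hypothetical.

```python
import subprocess


def parse_safemode_output(output: str) -> bool:
    """Return True if any line of `hdfs dfsadmin -safemode get` reports ON.

    Assumed line formats: "Safe mode is ON" or "Safe mode is OFF in nn-host:8020".
    """
    for line in output.splitlines():
        if line.split()[:4] == ["Safe", "mode", "is", "ON"]:
            return True
    return False


def namenode_in_safemode() -> bool:
    """Ask the NameNode for its safe mode state via the hdfs CLI (assumed on PATH)."""
    result = subprocess.run(
        ["hdfs", "dfsadmin", "-safemode", "get"],
        capture_output=True,
        text=True,
        check=True,
    )
    return parse_safemode_output(result.stdout)
```

In the notebook you could call `namenode_in_safemode()` and only run `SparkContext(conf=conf)` when it returns `False`; otherwise the same `SafeModeException` is expected.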

@satyajit_das, thanks for looking into it. The NameNode has come out of safe mode, and now I am able to run the Hadoop cp command.

@satyajit_das, I am facing the same issue again:

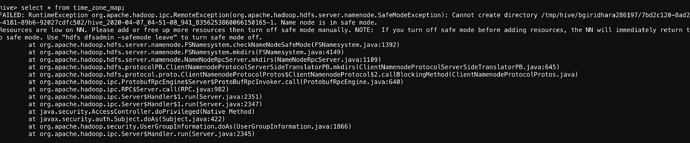

```
hive> select * from time_zone_map;
FAILED: RuntimeException org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.hdfs.server.namenode.SafeModeException): Cannot create directory /tmp/hive/bgiridhara286197/7bd2c120-8ad2-4181-89b6-92027cdfc502/hive_2020-04-07_04-51-08_941_8356253860066150165-1. Name node is in safe mode.
Resources are low on NN. Please add or free up more resources then turn off safe mode manually. NOTE: If you turn off safe mode before adding resources, the NN will immediately return to safe mode. Use "hdfs dfsadmin -safemode leave" to turn safe mode off.
    at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.checkNameNodeSafeMode(FSNamesystem.java:1392)
    at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.mkdirs(FSNamesystem.java:4149)
    at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.mkdirs(NameNodeRpcServer.java:1109)
    at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.mkdirs(ClientNamenodeProtocolServerSideTranslatorPB.java:645)
    at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)
    at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:640)
    at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:982)
    at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2351)
    at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2347)
    at java.security.AccessController.doPrivileged(Native Method)
    at javax.security.auth.Subject.doAs(Subject.java:422)
    at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1866)
    at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2345)
```
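The NameNode message above describes an order of operations: free up or add resources first, then run `hdfs dfsadmin -safemode leave`, because leaving earlier just sends the NameNode straight back into safe mode. A minimal sketch of that order, under several assumptions: you have HDFS superuser rights (on a shared lab cluster that usually means asking an administrator), the `hdfs` CLI is on PATH, and the path you pass is on the same disk as the NameNode's metadata/edits directories. The 100 MB threshold is an assumed default for `dfs.namenode.resource.du.reserved`; check your cluster's `hdfs-site.xml`. `has_enough_space` and `leave_safemode_if_space` are hypothetical helper names.

```python
import shutil
import subprocess

# Assumed default of dfs.namenode.resource.du.reserved (100 MB); verify in hdfs-site.xml.
RESERVED_BYTES = 100 * 1024 * 1024


def has_enough_space(free_bytes: int, reserved_bytes: int = RESERVED_BYTES) -> bool:
    """True if the free space on the NameNode volume is at least the reserved amount."""
    return free_bytes >= reserved_bytes


def leave_safemode_if_space(name_dir: str) -> bool:
    """Leave safe mode only after confirming the NameNode volume has room.

    `name_dir` is a hypothetical path on the same disk as the NameNode metadata.
    Running `-safemode leave` requires HDFS superuser privileges.
    """
    free = shutil.disk_usage(name_dir).free
    if not has_enough_space(free):
        # Leaving now would only put the NameNode back into safe mode.
        return False
    subprocess.run(["hdfs", "dfsadmin", "-safemode", "leave"], check=True)
    return True
```

The check is deliberately kept separate from the CLI call so the threshold logic can be tested without touching the cluster.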