Hi Team,

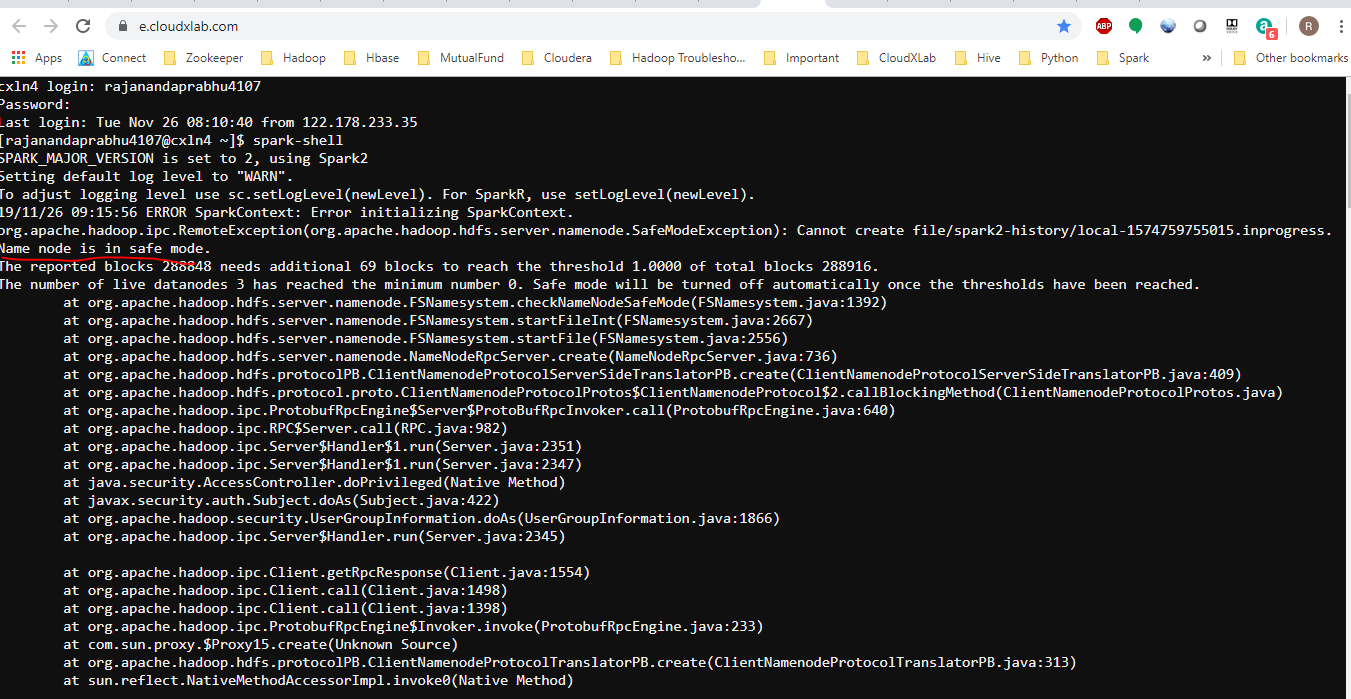

I am unable to connect to Hive and Spark; both fail with errors. Here is the error I get when connecting to Hive:

```
[rajanandaprabhu4107@cxln4 scripts]$ hive
log4j:WARN No such property [maxFileSize] in org.apache.log4j.DailyRollingFileAppender.

Logging initialized using configuration in file:/etc/hive/2.6.2.0-205/0/hive-log4j.properties
Exception in thread "main" java.lang.RuntimeException: java.net.ConnectException: Call From cxln4.c.thelab-240901.internal/10.142.1.4 to cxln1.c.thelab-240901.internal:8020 failed on connection exception: java.net.ConnectException: Connection refused; For more details see: http://wiki.apache.org/hadoop/ConnectionRefused
	at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:547)
	at org.apache.hadoop.hive.cli.CliDriver.run(CliDriver.java:681)
	at org.apache.hadoop.hive.cli.CliDriver.main(CliDriver.java:625)
	at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
	at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
	at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
	at java.lang.reflect.Method.invoke(Method.java:498)
	at org.apache.hadoop.util.RunJar.run(RunJar.java:233)
	at org.apache.hadoop.util.RunJar.main(RunJar.java:148)
Caused by: java.net.ConnectException: Call From cxln4.c.thelab-240901.internal/10.142.1.4 to cxln1.c.thelab-240901.internal:8020 failed on connection exception: java.net.ConnectException: Connection refused; For more details see: http://wiki.apache.org/hadoop/ConnectionRefused
	at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)
	at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)
	at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)
	at java.lang.reflect.Constructor.newInstance(Constructor.java:423)
	at org.apache.hadoop.net.NetUtils.wrapWithMessage(NetUtils.java:801)
	at org.apache.hadoop.net.NetUtils.wrapException(NetUtils.java:732)
	at org.apache.hadoop.ipc.Client.getRpcResponse(Client.java:1558)
	at org.apache.hadoop.ipc.Client.call(Client.java:1498)
	at org.apache.hadoop.ipc.Client.call(Client.java:1398)
	at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:233)
	at com.sun.proxy.$Proxy12.getFileInfo(Unknown Source)
	at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolTranslatorPB.getFileInfo(ClientNamenodeProtocolTranslatorPB.java:823)
	at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
	at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
	at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
	at java.lang.reflect.Method.invoke(Method.java:498)
	at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:291)
	at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:203)
	at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:185)
	at com.sun.proxy.$Proxy13.getFileInfo(Unknown Source)
	at org.apache.hadoop.hdfs.DFSClient.getFileInfo(DFSClient.java:2165)
	at org.apache.hadoop.hdfs.DistributedFileSystem$26.doCall(DistributedFileSystem.java:1442)
	at org.apache.hadoop.hdfs.DistributedFileSystem$26.doCall(DistributedFileSystem.java:1438)
	at org.apache.hadoop.fs.FileSystemLinkResolver.resolve(FileSystemLinkResolver.java:81)
	at org.apache.hadoop.hdfs.DistributedFileSystem.getFileStatus(DistributedFileSystem.java:1438)
	at org.apache.hadoop.fs.FileSystem.exists(FileSystem.java:1447)
	at org.apache.hadoop.hive.ql.session.SessionState.createRootHDFSDir(SessionState.java:632)
	at org.apache.hadoop.hive.ql.session.SessionState.createSessionDirs(SessionState.java:580)
	at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:533)
	... 8 more
Caused by: java.net.ConnectException: Connection refused
	at sun.nio.ch.SocketChannelImpl.checkConnect(Native Method)
	at sun.nio.ch.SocketChannelImpl.finishConnect(SocketChannelImpl.java:717)
	at org.apache.hadoop.net.SocketIOWithTimeout.connect(SocketIOWithTimeout.java:206)
	at org.apache.hadoop.net.NetUtils.connect(NetUtils.java:531)
	at org.apache.hadoop.net.NetUtils.connect(NetUtils.java:495)
	at org.apache.hadoop.ipc.Client$Connection.setupConnection(Client.java:650)
	at org.apache.hadoop.ipc.Client$Connection.setupIOstreams(Client.java:745)
	at org.apache.hadoop.ipc.Client$Connection.access$3200(Client.java:397)
	at org.apache.hadoop.ipc.Client.getConnection(Client.java:1620)
	at org.apache.hadoop.ipc.Client.call(Client.java:1451)
	... 29 more
```
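The trace shows Hive failing while it creates its HDFS session directories: the client on cxln4 cannot open a connection to cxln1.c.thelab-240901.internal:8020, which the `ClientNamenodeProtocolTranslatorPB` frames suggest is the HDFS NameNode RPC port. A quick way to confirm whether that port is reachable from cxln4 at all is a plain TCP connect, sketched below. This is only a diagnostic sketch: the host and port are copied from the error message, and the helper name `port_is_open` is hypothetical. A `False` result would be consistent with the "Connection refused" above (nothing listening on that port, or the connection being rejected).

```python
import socket

# Host and port copied from the error message. Port 8020 is presumably the
# NameNode RPC address Hive uses for its scratch/session directories.
NAMENODE_HOST = "cxln1.c.thelab-240901.internal"
NAMENODE_PORT = 8020


def port_is_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Hypothetical helper: True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # OSError covers ConnectionRefusedError (nothing listening),
        # socket.timeout, and DNS failures (socket.gaierror).
        return False


if __name__ == "__main__":
    ok = port_is_open(NAMENODE_HOST, NAMENODE_PORT)
    print(f"{NAMENODE_HOST}:{NAMENODE_PORT} reachable: {ok}")
```

Running it with `python3` on cxln4 only tells you whether the socket opens; it does not check HDFS health itself.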

Could you please look into this and fix it as soon as possible?

Thanks & Regards

Raj