Hi @sgiri

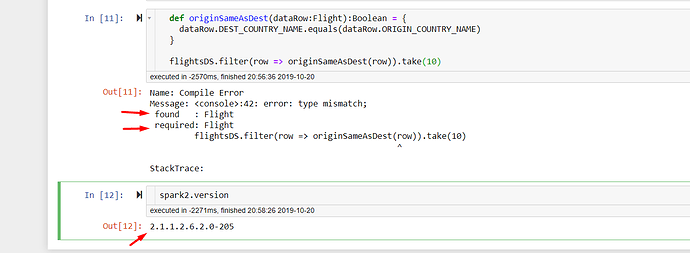

The Jupyter notebook with the Scala kernel runs a very old version of Spark (v2.1.1). As a result, most of the newer DataFrame and Dataset operations introduced after v2.1 fail.

Is it possible to update the Spark version used by the Jupyter notebook to 2.3 or 2.4?