I created a MySQL table, ztutorials_tbl, and populated it with data.

I then tried to import it with the following Sqoop command:

```
sqoop import --connect "jdbc:mysql://ip-172-31-13-154:3306/retail_db" --table ztutorials_tbl --username sqoopuser -P
```

After I enter the password, the import gets stuck at this point:

```
18/05/21 10:28:54 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.
18/05/21 10:28:54 INFO tool.CodeGenTool: Beginning code generation
SLF4J: Class path contains multiple SLF4J bindings.
SLF4J: Found binding in [jar:file:/usr/hdp/2.3.4.0-3485/hadoop/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: Found binding in [jar:file:/usr/hdp/2.3.4.0-3485/zookeeper/lib/slf4j-log4j12-1.6.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: Found binding in [jar:file:/usr/hdp/2.3.4.0-3485/accumulo/lib/slf4j-log4j12.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]
18/05/21 10:28:58 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM ztutorials_tbl AS t LIMIT 1
18/05/21 10:28:58 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM ztutorials_tbl AS t LIMIT 1
18/05/21 10:28:58 INFO orm.CompilationManager: HADOOP_MAPRED_HOME is /usr/hdp/2.3.4.0-3485/hadoop-mapreduce
Note: /tmp/sqoop-uutkarshsingh7351/compile/d74f816677a8948eb0795827293b6945/ztutorials_tbl.java uses or overrides a deprecated API.
Note: Recompile with -Xlint:deprecation for details.
18/05/21 10:29:11 INFO orm.CompilationManager: Writing jar file: /tmp/sqoop-uutkarshsingh7351/compile/d74f816677a8948eb0795827293b6945/ztutorials_tbl.jar
18/05/21 10:29:11 WARN manager.MySQLManager: It looks like you are importing from mysql.
18/05/21 10:29:11 WARN manager.MySQLManager: This transfer can be faster! Use the --direct
18/05/21 10:29:11 WARN manager.MySQLManager: option to exercise a MySQL-specific fast path.
18/05/21 10:29:11 INFO manager.MySQLManager: Setting zero DATETIME behavior to convertToNull (mysql)
18/05/21 10:29:11 INFO mapreduce.ImportJobBase: Beginning import of ztutorials_tbl
18/05/21 10:29:22 INFO impl.TimelineClientImpl: Timeline service address: http://ip-172-31-13-154.ec2.internal:8188/ws/v1/timeline/
18/05/21 10:29:23 INFO client.RMProxy: Connecting to ResourceManager at ip-172-31-53-48.ec2.internal/172.31.53.48:8050
18/05/21 10:29:38 INFO db.DBInputFormat: Using read commited transaction isolation
18/05/21 10:29:38 INFO db.DataDrivenDBInputFormat: BoundingValsQuery: SELECT MIN(tutorial_id), MAX(tutorial_id) FROM ztutorials_tbl
18/05/21 10:29:39 INFO mapreduce.JobSubmitter: number of splits:3
```

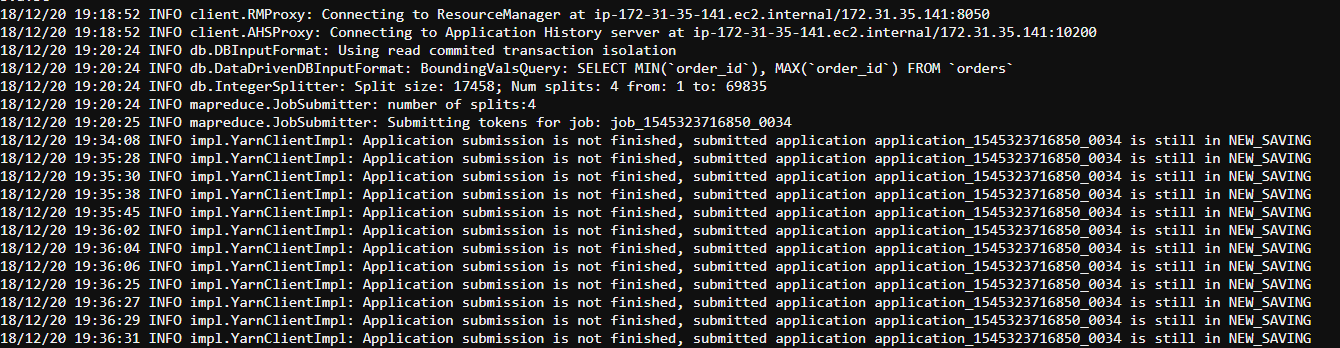

```
18/05/21 10:40:18 INFO impl.YarnClientImpl: Application submission is not finished, submitted application application_1524162637175_28397 is still in NEW_SAVING
```

The console keeps printing this message without making any progress. Has anyone faced this issue?
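For reference, the application ID can be taken from the last log line and passed to the YARN CLI to see what the ResourceManager itself reports for it. This is a minimal sketch, assuming the `yarn` client on this node is configured against the same ResourceManager (ip-172-31-53-48); `extract_app_id` is a hypothetical helper name, and the YARN queries are skipped when the CLI is not on the PATH:

```shell
#!/usr/bin/env bash
# Hypothetical helper: pull the first YARN application ID out of a log line.
extract_app_id() {
  grep -oE 'application_[0-9]+_[0-9]+' <<< "$1" | head -n 1
}

line='18/05/21 10:40:18 INFO impl.YarnClientImpl: Application submission is not finished, submitted application application_1524162637175_28397 is still in NEW_SAVING'
app_id=$(extract_app_id "$line")
echo "$app_id"

# Only query the ResourceManager if the yarn CLI is available on this host
# (assumption: it points at the same RM the Sqoop job submitted to).
if command -v yarn >/dev/null 2>&1; then
  yarn application -status "$app_id"
  # List every application the RM still reports as NEW_SAVING.
  yarn application -list -appStates NEW_SAVING
fi
```

If many applications show up as NEW_SAVING, the problem is likely on the ResourceManager side rather than in the Sqoop command itself.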