Hi,

I am facing this problem. I had run into it earlier as well, but I am not sure why my earlier question was not answered.

When using the latest spark-shell (Spark 2.2.1), the system throws the following errors:

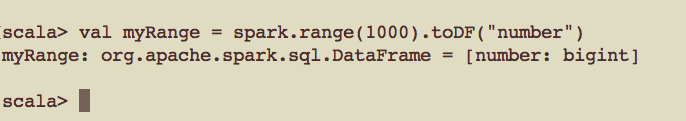

val myRange = spark.range(1000).toDF("number")

java.lang.IllegalArgumentException: Error while instantiating 'org.apache.spark.sql.hive.HiveSessionStateBuilder':

**LOTS OF ERRORS**

Please make sure that jars for your version of hive and hadoop are included in the paths passed to spark.sql.hive.metastore.jars.

Caused by: java.lang.reflect.InvocationTargetException: java.lang.NoClassDefFoundError: org/apache/tez/dag/api/SessionNotRunning

**LOTS OF ERRORS**

Caused by: java.lang.NoClassDefFoundError: org/apache/tez/dag/api/SessionNotRunning

**LOTS OF ERRORS**

Caused by: java.lang.ClassNotFoundException: org.apache.tez.dag.api.SessionNotRunning

**LOTS OF ERRORS**

Please help me answer this question. @abhinav @sandeepgiri

I also get an error when using PySpark:

>>> myRange = spark.range(1000).toDF("number")

Traceback (most recent call last):

`File "<stdin>", line 1, in <module>`

NameError: name 'spark' is not defined

However, I am not sure which version of PySpark is being used here.

I am running the examples from the book Spark: The Definitive Guide.

Thanks