I followed both of the steps below, but I am still getting the disk space quota exceeded error:

- Ran `hadoop fs -setrep -w 1 /user/gladiator2012795723` to set the replication factor to 1.

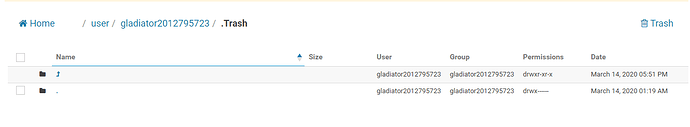

- Explicitly hard-deleted a lot of folders and files, after which the size occupied by my folder dropped from 421 MB to 65 MB (screenshot attached). Command used to get the size: `hadoop fs -du -s -h /user/gladiator2012795723`
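For diagnosis, the quota limits themselves (as opposed to the space used) can be checked with `hadoop fs -count -q`. The following is a minimal sketch, assuming the `hadoop` client is on the PATH and that the installed version supports the `-h` flag for `-count`; the `DIR` variable is only an illustrative name.

```shell
# Directory from the commands above; DIR is an illustrative variable name.
DIR=/user/gladiator2012795723

if command -v hadoop >/dev/null 2>&1; then
  # Output columns: QUOTA, REMAINING_QUOTA, SPACE_QUOTA, REMAINING_SPACE_QUOTA,
  # DIR_COUNT, FILE_COUNT, CONTENT_SIZE, PATHNAME
  hadoop fs -count -q -h "$DIR"
else
  # Assumption: without a hadoop client there is nothing to query.
  echo "hadoop client not found on PATH" >&2
fi
```

The HDFS space quota counts replicated bytes, so each file consumes its size multiplied by its replication factor. The `REMAINING_SPACE_QUOTA` column should show how much headroom is actually left.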

Kindly help me get this resolved as soon as possible.