I am using Structured Streaming to read data from a socket, do a simple word count, and then write the output of the word count back to a Hive table. The code I am using is below.

First, I created a managed table in Hive:

```sql
create table wordcounts_table(words String, count int) stored as parquet;
```

Then I run the following code in spark-shell:

```scala
val lines = spark.readStream.format("socket").option("host", "localhost").option("port", 9999).load().as[String]

val words = lines.flatMap(_.split(" "))
```

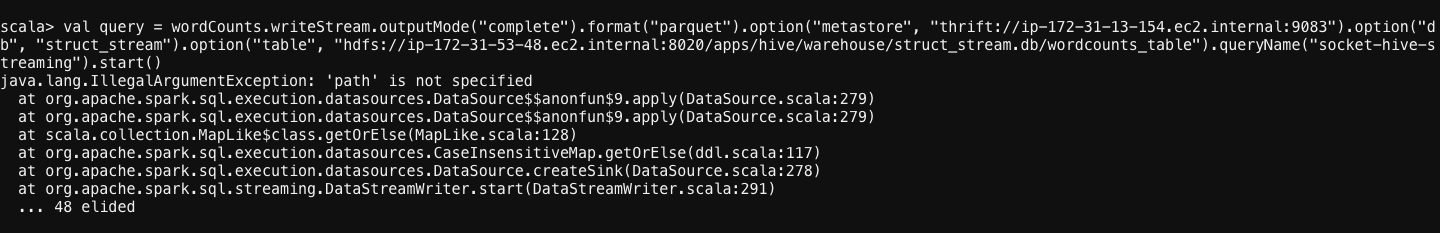
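The `wordCounts` definition is only visible in the screenshot. Assuming it follows the Structured Streaming programming guide's example (`words.groupBy("value").count()`), the plain-Scala sketch below (no Spark needed; `countWords` is a hypothetical helper) shows what the query computes: in "complete" output mode each trigger emits the counts over all lines received so far, not only the newly arrived ones.

```scala
// Plain Scala (no Spark) sketch of what the streaming word count computes.
// Assumption: wordCounts = words.groupBy("value").count(), as in the Spark
// programming guide; the real definition is only in the screenshot.

// Split each line on single spaces (same as lines.flatMap(_.split(" ")))
// and count the occurrences of each word. Spark's count() yields a Long.
def countWords(lines: Seq[String]): Map[String, Long] =
  lines
    .flatMap(_.split(" "))
    .groupBy(identity)
    .map { case (word, occurrences) => word -> occurrences.size.toLong }

// In "complete" mode every trigger emits the full result table, i.e. the
// counts over all lines seen so far, not just the lines in the new batch.
val batch1 = Seq("hello world")
val batch2 = Seq("hello spark")
println(countWords(batch1))           // result table after the first trigger
println(countWords(batch1 ++ batch2)) // result table after the second trigger
```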

```scala
val query = wordCounts.writeStream.outputMode("complete").format("parquet").option("metastore", "thrift://ip-172-31-13-154.ec2.internal:9083").option("db", "struct_stream").option("table", "hdfs://ip-172-31-53-48.ec2.internal:8020/apps/hive/warehouse/struct_stream.db/wordcounts_table").queryName("socket-hive-streaming").start()
```

When I execute the last line, I get the following exception:

```
java.lang.IllegalArgumentException: 'path' is not specified
```

I have attached a screenshot of the full exception. I have tried a number of things, but I still cannot work out what is causing this error or how to resolve it.
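For reference, a likely cause: `format("parquet")` selects Spark's built-in file sink, which expects an output directory in the `path` option (plus a checkpoint location), and, as far as I know, `metastore`, `db` and `table` are not options it understands. The file sink also supports only the append output mode, so it cannot run this aggregation in "complete" mode. Below is a minimal sketch of one commonly suggested alternative, assuming Spark 2.4+ (for `foreachBatch`), a Hive-enabled spark-shell, and a `wordCounts` with columns `value` and `count` as produced by `words.groupBy("value").count()`. It writes each micro-batch into the existing table with `insertInto`. The checkpoint directory is a hypothetical path. In spark-shell, enter it with `:paste` so the multi-line chains are read as one block.

```scala
// Sketch only: assumes Spark 2.4+ (for foreachBatch), a SparkSession `spark`
// with Hive support (as in a Hive-enabled spark-shell), and that `wordCounts`
// has columns `value` (String) and `count` (Long).
import org.apache.spark.sql.DataFrame
import org.apache.spark.sql.functions.col

// Writes one micro-batch to the existing Hive table. In "complete" mode each
// batch holds the full result table, so the table is overwritten every time.
// Declared as a typed function value so foreachBatch's overloads are unambiguous.
val writeBatch: (DataFrame, Long) => Unit = (batchDF, batchId) =>
  batchDF
    .select(col("value").as("words"), col("count").cast("int").as("count"))
    .write
    .mode("overwrite")
    .insertInto("struct_stream.wordcounts_table") // positional: matches the table's column order

val query = wordCounts.writeStream
  .outputMode("complete")
  .foreachBatch(writeBatch)
  // Hypothetical checkpoint directory; any writable HDFS path should do.
  .option("checkpointLocation", "/tmp/checkpoints/socket-hive-streaming")
  .queryName("socket-hive-streaming")
  .start()
```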