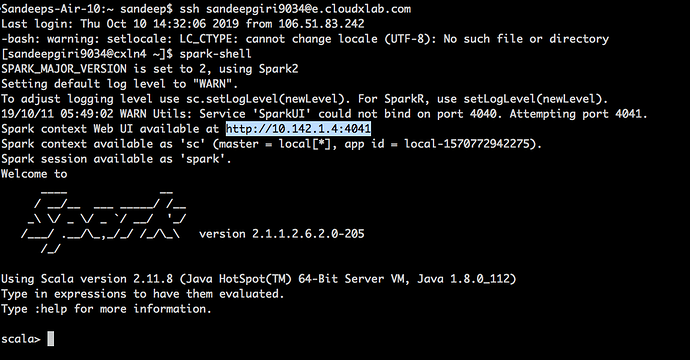

When I start the Spark shell with the command

`spark-shell`

I see the following output:

SPARK_MAJOR_VERSION is set to 2, using Spark2

Setting default log level to "WARN".

To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

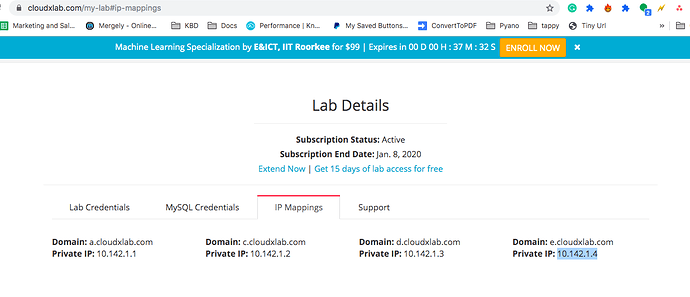

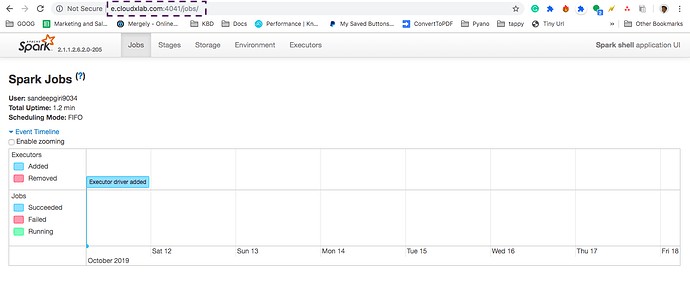

Spark context Web UI available at http://10.142.1.4:4040

Spark context available as 'sc' (master = local[*], app id = local-1570732770075).

Spark session available as 'spark'.

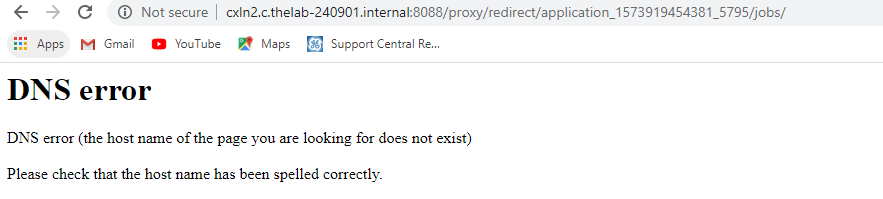

However, the Spark UI URL is not accessible. I have tried 4 or 5 times and also got different port numbers across attempts, but I still could not connect.
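One way to narrow this down is to check, from the machine where the browser runs, whether any port in the UI range accepts a TCP connection on the host from the banner (10.142.1.4). The sketch below assumes Spark's default behaviour of starting the UI at port 4040 and moving to the next port when one is already taken, up to the default `spark.port.maxRetries` of 16 (so ports 4040 to 4056). The helper names `port_is_open` and `scan_ui_ports` are hypothetical, not part of Spark.

```python
import socket
from typing import List


def port_is_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        # create_connection resolves the host and completes the TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, timed out, host unreachable, or name resolution failed.
        return False


def scan_ui_ports(host: str, first_port: int = 4040, count: int = 17,
                  timeout: float = 1.0) -> List[int]:
    """Return the ports in [first_port, first_port + count) that accept a connection.

    The defaults assume the Spark UI starts at 4040 and retries up to 16 higher
    ports (spark.port.maxRetries = 16); adjust them if your configuration differs.
    """
    return [p for p in range(first_port, first_port + count)
            if port_is_open(host, p, timeout)]
```

For example, calling `scan_ui_ports("10.142.1.4")` while `spark-shell` is still running should list the UI port if it is reachable. An empty list while the shell is up would suggest the problem lies in the network path between the two machines (10.142.x.x is a private address range) rather than in Spark itself.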

Can you please explain why the Spark UI cannot be reached?