Hello,

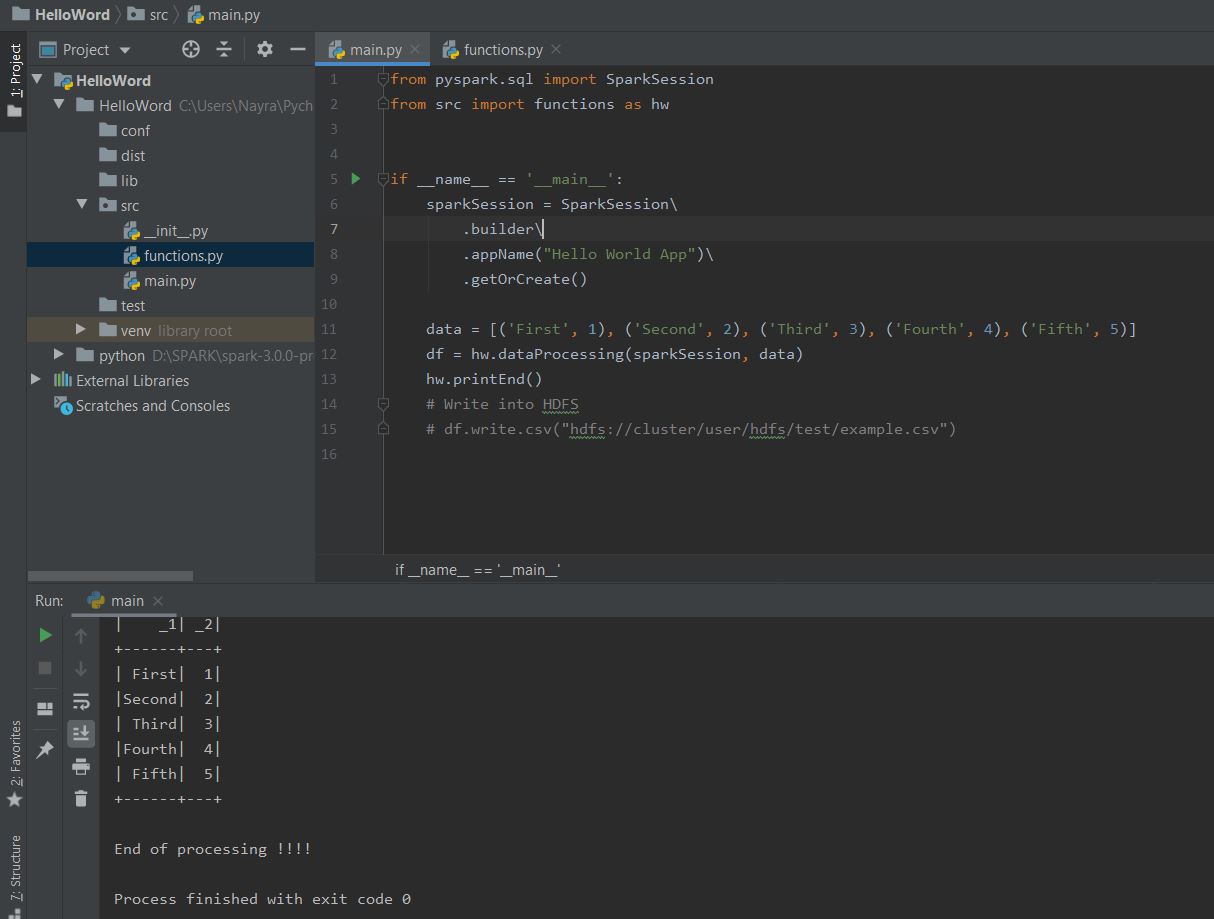

I have developed my PySpark project with two modules. See the attached screenshot for the project structure.

My question is: how do I deploy it with spark-submit and specify the entry point?

Thank you in advance.
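A common way to do this with `spark-submit` is to pass the main script as the application file, which is the entry point, and ship the other modules with `--py-files`, which accepts `.py`, `.zip` or `.egg` files and places them on the `PYTHONPATH`. The helper below is a minimal sketch of that approach. It zips a package directory and builds the `spark-submit` argument list. The names `build_submit_command`, `mypkg` and `main.py` are hypothetical placeholders, since the real layout is only in the screenshot, and it assumes the non-entry modules live in a package directory that contains an `__init__.py`.

```python
import os
import shutil


def build_submit_command(project_dir, package_name, entry_script, out_dir):
    """Zip project_dir/package_name and return a spark-submit argument list.

    The package is stored under its own directory name inside the zip, so
    `import <package_name>` works once the zip is on the PYTHONPATH.
    """
    archive = shutil.make_archive(
        os.path.join(out_dir, package_name),  # writes <out_dir>/<package_name>.zip
        "zip",
        root_dir=project_dir,
        base_dir=package_name,
    )
    return [
        "spark-submit",
        "--py-files", archive,                    # extra modules for driver and executors
        os.path.join(project_dir, entry_script),  # main script = entry point
    ]
```

The returned list can be run with `subprocess.call(cmd)` or typed as a shell command of the form `spark-submit --py-files /path/to/mypkg.zip /path/to/main.py`.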

Hi,

I just answered this in another post. Please check this out.

What needs to be included when using SparkSession in a .py file that is run with spark-submit?

I have included the following:

```python
import os
import sys

os.environ["SPARK_HOME"] = "/usr/spark2.3/"
os.environ["PYLIB"] = os.environ["SPARK_HOME"] + "/python/lib"
os.environ["PYSPARK_PYTHON"] = "/usr/local/anaconda/bin/python"
os.environ["PYSPARK_DRIVER_PYTHON"] = "/usr/local/anaconda/bin/python"
sys.path.insert(0, os.environ["PYLIB"] + "/py4j-0.10.7-src.zip")
sys.path.insert(0, os.environ["PYLIB"] + "/pyspark.zip")

#from pyspark.sql.types import *
from pyspark.sql import SparkSession

spark = SparkSession \
    .Builder() \
    .appName('Spark Dataframe') \
    .getOrCreate()

sc = spark.sparkContext
```

But it throws the following error:

```text
SPARK_MAJOR_VERSION is set to 2, using Spark2
Traceback (most recent call last):
  File "/home/pratik58892973/word_count.py", line 14, in <module>
    .appName('Spark Dataframe')
  File "/usr/spark2.3//python/lib/pyspark.zip/pyspark/sql/session.py", line 173, in getOrCreate
  File "/usr/spark2.3//python/lib/pyspark.zip/pyspark/context.py", line 363, in getOrCreate
  File "/usr/spark2.3//python/lib/pyspark.zip/pyspark/context.py", line 129, in __init__
  File "/usr/spark2.3//python/lib/pyspark.zip/pyspark/context.py", line 312, in _ensure_initialized
  File "/usr/spark2.3//python/lib/pyspark.zip/pyspark/java_gateway.py", line 46, in launch_gateway
  File "/usr/spark2.3//python/lib/pyspark.zip/pyspark/java_gateway.py", line 60, in _launch_gateway
  File "/usr/lib64/python2.7/UserDict.py", line 23, in __getitem__
    raise KeyError(key)
KeyError: 'PYSPARK_GATEWAY_SECRET'
```
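One likely cause, judging from the traceback (an assumption, not confirmed in this thread): the script puts the `pyspark.zip` from `/usr/spark2.3` on `sys.path`, and that library reads `PYSPARK_GATEWAY_SECRET` when it is launched by `spark-submit`, but the `spark-submit` that actually ran (`SPARK_MAJOR_VERSION is set to 2, using Spark2`) may be a different, older Spark 2 install that only sets `PYSPARK_GATEWAY_PORT`. Running `spark-submit --version` shows which version launched the job. The hypothetical helper `gateway_env_problem` below is a small sketch that checks the environment for that mismatch, so it can be called at the top of the script, before the `SparkSession` is built.

```python
import os


def gateway_env_problem(env=None):
    """Return a hint if spark-submit's gateway variables look mismatched.

    Assumption: the pyspark library on sys.path needs both
    PYSPARK_GATEWAY_PORT and PYSPARK_GATEWAY_SECRET when launched by
    spark-submit (the KeyError above shows it reads the secret), while an
    older launcher sets only the port.
    """
    if env is None:
        env = os.environ
    if "PYSPARK_GATEWAY_PORT" in env and "PYSPARK_GATEWAY_SECRET" not in env:
        return ("PYSPARK_GATEWAY_PORT is set but PYSPARK_GATEWAY_SECRET is not: "
                "spark-submit is probably older than the pyspark on sys.path")
    return None


if __name__ == "__main__":
    hint = gateway_env_problem()
    if hint:
        print(hint)
```

If the hint appears, two options to try are running the launcher that matches the library, e.g. `/usr/spark2.3/bin/spark-submit` (assuming a standard Spark layout under that directory), or removing the `SPARK_HOME` and `sys.path` overrides so the script uses the `pyspark` that ships with the launcher.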

@sgiri, can you please help me solve this error?

The following is the code of my Python file:

```python
import collections  # needed for collections.OrderedDict below
import os
import sys

os.environ["SPARK_HOME"] = "/usr/spark2.3/"
os.environ["PYLIB"] = os.environ["SPARK_HOME"] + "/python/lib"
os.environ["PYSPARK_PYTHON"] = "/usr/local/anaconda/bin/python"
os.environ["PYSPARK_DRIVER_PYTHON"] = "/usr/local/anaconda/bin/python"
sys.path.insert(0, os.environ["PYLIB"] + "/py4j-0.10.7-src.zip")
sys.path.insert(0, os.environ["PYLIB"] + "/pyspark.zip")

from pyspark.sql import SparkSession

spark = SparkSession \
    .builder \
    .master("local") \
    .config('some.config.some.option', 'some-value') \
    .appName('RatingsHistogram') \
    .getOrCreate()

sc = spark.sparkContext

# Each line of u.data is "user item rating timestamp"; keep the rating field.
lines = sc.textFile("/user/pratik58892973//ml-100k/u.data")
ratings = lines.map(lambda x: x.split()[2])
result = ratings.countByValue()

# Print the number of ratings per rating value, in ascending order.
sortedResults = collections.OrderedDict(sorted(result.items()))
for key, value in sortedResults.items():
    print("%s %i" % (key, value))
```
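To check the counting logic without a cluster, the same steps can be reproduced in plain Python: `collections.Counter` stands in for `countByValue()` (both produce a value-to-count mapping), and the sorting step is unchanged. Both versions need `import collections`. The function name `rating_histogram` is hypothetical, and the sample rows are illustrative, in the tab-separated user id, item id, rating, timestamp layout that MovieLens 100k uses for `u.data`.

```python
import collections


def rating_histogram(lines):
    """Pure-Python stand-in for lines.map(...).countByValue() plus sorting."""
    # The third whitespace-separated field of each row is the rating.
    ratings = (line.split()[2] for line in lines)
    result = collections.Counter(ratings)  # like countByValue()
    return collections.OrderedDict(sorted(result.items()))


# Illustrative rows in the user / item / rating / timestamp layout.
sample = [
    "196\t242\t3\t881250949",
    "186\t302\t3\t891717742",
    "22\t377\t1\t878887116",
    "244\t51\t2\t880606923",
]

for key, value in rating_histogram(sample).items():
    print("%s %i" % (key, value))
```

With these four rows it prints `1 1`, `2 1` and `3 2`, one per line.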

What error are you getting?

/user/pratik58892973//ml-100k/u.dat

There seems to be an extra slash after `pratik58892973`.
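Whether or not the doubled slash is what breaks the job, building paths with `posixpath.join` instead of string concatenation (the script also does `SPARK_HOME + "/python/lib"`, which is where the `/usr/spark2.3//python/lib` in the traceback comes from) avoids it, and `posixpath.normpath` collapses one that is already there. A small sketch using the paths from this thread:

```python
import posixpath

# posixpath.join adds a "/" only when the left part does not already end in one.
data_path = posixpath.join("/user/pratik58892973/", "ml-100k/u.data")
print(data_path)  # /user/pratik58892973/ml-100k/u.data

pylib = posixpath.join("/usr/spark2.3/", "python", "lib")
print(pylib)  # /usr/spark2.3/python/lib

# Caution: a component that starts with "/" discards everything before it,
# e.g. posixpath.join("/usr/spark2.3/", "/python/lib") gives "/python/lib".

# normpath collapses an existing "//" in a plain path. Do not use it on
# URIs such as "hdfs://...", where it would also collapse the "//".
cleaned = posixpath.normpath("/user/pratik58892973//ml-100k/u.data")
print(cleaned)  # /user/pratik58892973/ml-100k/u.data
```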

I'm getting the following error:

```text
  File "/home/pratik58892973/ratings_code.py", line 15, in <module>
    .appName('RatingsHistogram')
  File "/usr/spark2.3//python/lib/pyspark.zip/pyspark/sql/session.py", line 173, in getOrCreate
  File "/usr/spark2.3//python/lib/pyspark.zip/pyspark/context.py", line 363, in getOrCreate
  File "/usr/spark2.3//python/lib/pyspark.zip/pyspark/context.py", line 129, in __init__
  File "/usr/spark2.3//python/lib/pyspark.zip/pyspark/context.py", line 312, in _ensure_initialized
  File "/usr/spark2.3//python/lib/pyspark.zip/pyspark/java_gateway.py", line 46, in launch_gateway
  File "/usr/spark2.3//python/lib/pyspark.zip/pyspark/java_gateway.py", line 60, in _launch_gateway
  File "/usr/lib64/python2.7/UserDict.py", line 23, in __getitem__
    raise KeyError(key)
KeyError: 'PYSPARK_GATEWAY_SECRET'
```