Below is the stack trace from spark-shell:

```
[ravitejarockon1712@ip-172-31-60-179 scripts]$ ./spark-2.0.sh
Ivy Default Cache set to: /home/ravitejarockon1712/.ivy2/cache
The jars for the packages stored in: /home/ravitejarockon1712/.ivy2/jars
:: loading settings :: url = jar:file:/usr/spark2.0.1/jars/ivy-2.4.0.jar!/org/apache/ivy/core/settings/ivysettings.xml
com.databricks#spark-xml_2.11 added as a dependency
com.databricks#spark-csv_2.11 added as a dependency
com.databricks#spark-avro_2.11 added as a dependency
graphframes#graphframes added as a dependency
:: resolving dependencies :: org.apache.spark#spark-submit-parent;1.0
confs: [default]
found com.databricks#spark-xml_2.11;0.4.1 in central
found com.databricks#spark-csv_2.11;1.5.0 in central
found org.apache.commons#commons-csv;1.1 in central
found com.univocity#univocity-parsers;1.5.1 in central
found com.databricks#spark-avro_2.11;3.2.0 in central
found org.slf4j#slf4j-api;1.7.5 in local-m2-cache
found org.apache.avro#avro;1.7.6 in local-m2-cache
found org.codehaus.jackson#jackson-core-asl;1.9.13 in list
found org.codehaus.jackson#jackson-mapper-asl;1.9.13 in list
found com.thoughtworks.paranamer#paranamer;2.3 in list
found org.xerial.snappy#snappy-java;1.0.5 in local-m2-cache
found org.apache.commons#commons-compress;1.4.1 in list
found org.tukaani#xz;1.0 in list
found graphframes#graphframes;0.5.0-spark2.1-s_2.11 in spark-packages
found com.typesafe.scala-logging#scala-logging-api_2.11;2.1.2 in central
found com.typesafe.scala-logging#scala-logging-slf4j_2.11;2.1.2 in central
found org.scala-lang#scala-reflect;2.11.0 in central
found org.slf4j#slf4j-api;1.7.7 in local-m2-cache
:: resolution report :: resolve 4959ms :: artifacts dl 281ms
:: modules in use:
com.databricks#spark-avro_2.11;3.2.0 from central in [default]
com.databricks#spark-csv_2.11;1.5.0 from central in [default]
com.databricks#spark-xml_2.11;0.4.1 from central in [default]
com.thoughtworks.paranamer#paranamer;2.3 from list in [default]
com.typesafe.scala-logging#scala-logging-api_2.11;2.1.2 from central in [default]
com.typesafe.scala-logging#scala-logging-slf4j_2.11;2.1.2 from central in [default]
com.univocity#univocity-parsers;1.5.1 from central in [default]
graphframes#graphframes;0.5.0-spark2.1-s_2.11 from spark-packages in [default]
org.apache.avro#avro;1.7.6 from local-m2-cache in [default]
org.apache.commons#commons-compress;1.4.1 from list in [default]
org.apache.commons#commons-csv;1.1 from central in [default]
org.codehaus.jackson#jackson-core-asl;1.9.13 from list in [default]
org.codehaus.jackson#jackson-mapper-asl;1.9.13 from list in [default]
org.scala-lang#scala-reflect;2.11.0 from central in [default]
org.slf4j#slf4j-api;1.7.7 from local-m2-cache in [default]
org.tukaani#xz;1.0 from list in [default]
org.xerial.snappy#snappy-java;1.0.5 from local-m2-cache in [default]
:: evicted modules:
org.slf4j#slf4j-api;1.7.5 by [org.slf4j#slf4j-api;1.7.7] in [default]
org.slf4j#slf4j-api;1.6.4 by [org.slf4j#slf4j-api;1.7.5] in [default]
---------------------------------------------------------------------
|                  |            modules            ||   artifacts   |
|       conf       | number| search|dwnlded|evicted|| number|dwnlded|
---------------------------------------------------------------------
|      default     |   19  |   2   |   2   |   2   ||   17  |   0   |
---------------------------------------------------------------------
```

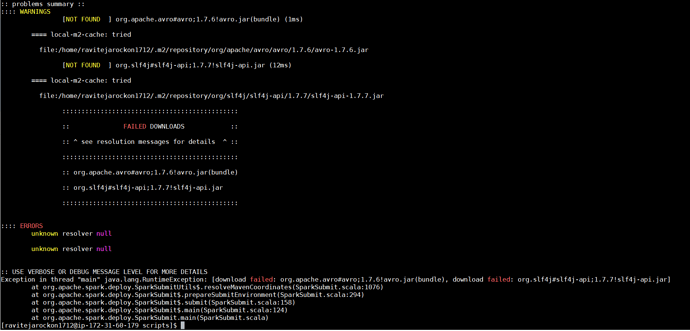

```
:: problems summary ::
:::: WARNINGS
[NOT FOUND  ] org.apache.avro#avro;1.7.6!avro.jar(bundle) (1ms)
==== local-m2-cache: tried
file:/home/ravitejarockon1712/.m2/repository/org/apache/avro/avro/1.7.6/avro-1.7.6.jar
[NOT FOUND  ] org.slf4j#slf4j-api;1.7.7!slf4j-api.jar (12ms)
==== local-m2-cache: tried
file:/home/ravitejarockon1712/.m2/repository/org/slf4j/slf4j-api/1.7.7/slf4j-api-1.7.7.jar
::::::::::::::::::::::::::::::::::::::::::::::
:: FAILED DOWNLOADS ::
:: ^ see resolution messages for details ^ ::
::::::::::::::::::::::::::::::::::::::::::::::
:: org.apache.avro#avro;1.7.6!avro.jar(bundle)
:: org.slf4j#slf4j-api;1.7.7!slf4j-api.jar
::::::::::::::::::::::::::::::::::::::::::::::
:::: ERRORS
unknown resolver null
unknown resolver null
:: USE VERBOSE OR DEBUG MESSAGE LEVEL FOR MORE DETAILS
Exception in thread "main" java.lang.RuntimeException: [download failed: org.apache.avro#avro;1.7.6!avro.jar(bundle), download failed: org.slf4j#slf4j-api;1.7.7!slf4j-api.jar]
	at org.apache.spark.deploy.SparkSubmitUtils$.resolveMavenCoordinates(SparkSubmit.scala:1076)
	at org.apache.spark.deploy.SparkSubmit$.prepareSubmitEnvironment(SparkSubmit.scala:294)
	at org.apache.spark.deploy.SparkSubmit$.submit(SparkSubmit.scala:158)
	at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:124)
	at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)
```

PySpark Stack Trace:

```
/usr/spark2.0.1/bin/pyspark
Python 2.7.14 |Anaconda custom (64-bit)| (default, Oct 16 2017, 17:29:19)
[GCC 7.2.0] on linux2
Type "help", "copyright", "credits" or "license" for more information.
Ivy Default Cache set to: /home/ravitejarockon1712/.ivy2/cache
The jars for the packages stored in: /home/ravitejarockon1712/.ivy2/jars
:: loading settings :: url = jar:file:/usr/spark2.0.1/jars/ivy-2.4.0.jar!/org/apache/ivy/core/settings/ivysettings.xml
com.databricks#spark-xml_2.11 added as a dependency
com.databricks#spark-csv_2.11 added as a dependency
com.databricks#spark-avro_2.11 added as a dependency
graphframes#graphframes added as a dependency
:: resolving dependencies :: org.apache.spark#spark-submit-parent;1.0
confs: [default]
found com.databricks#spark-xml_2.11;0.4.1 in central
found com.databricks#spark-csv_2.11;1.5.0 in central
found org.apache.commons#commons-csv;1.1 in central
found com.univocity#univocity-parsers;1.5.1 in central
found com.databricks#spark-avro_2.11;3.2.0 in central
found org.slf4j#slf4j-api;1.7.5 in local-m2-cache
found org.apache.avro#avro;1.7.6 in local-m2-cache
found org.codehaus.jackson#jackson-core-asl;1.9.13 in list
found org.codehaus.jackson#jackson-mapper-asl;1.9.13 in list
found com.thoughtworks.paranamer#paranamer;2.3 in list
found org.xerial.snappy#snappy-java;1.0.5 in local-m2-cache
found org.apache.commons#commons-compress;1.4.1 in list
found org.tukaani#xz;1.0 in list
found graphframes#graphframes;0.5.0-spark2.1-s_2.11 in spark-packages
found com.typesafe.scala-logging#scala-logging-api_2.11;2.1.2 in central
found com.typesafe.scala-logging#scala-logging-slf4j_2.11;2.1.2 in central
found org.scala-lang#scala-reflect;2.11.0 in central
found org.slf4j#slf4j-api;1.7.7 in local-m2-cache
:: resolution report :: resolve 5183ms :: artifacts dl 272ms
:: modules in use:
com.databricks#spark-avro_2.11;3.2.0 from central in [default]
com.databricks#spark-csv_2.11;1.5.0 from central in [default]
com.databricks#spark-xml_2.11;0.4.1 from central in [default]
com.thoughtworks.paranamer#paranamer;2.3 from list in [default]
com.typesafe.scala-logging#scala-logging-api_2.11;2.1.2 from central in [default]
com.typesafe.scala-logging#scala-logging-slf4j_2.11;2.1.2 from central in [default]
com.univocity#univocity-parsers;1.5.1 from central in [default]
graphframes#graphframes;0.5.0-spark2.1-s_2.11 from spark-packages in [default]
org.apache.avro#avro;1.7.6 from local-m2-cache in [default]
org.apache.commons#commons-compress;1.4.1 from list in [default]
org.apache.commons#commons-csv;1.1 from central in [default]
org.codehaus.jackson#jackson-core-asl;1.9.13 from list in [default]
org.codehaus.jackson#jackson-mapper-asl;1.9.13 from list in [default]
org.scala-lang#scala-reflect;2.11.0 from central in [default]
org.slf4j#slf4j-api;1.7.7 from local-m2-cache in [default]
org.tukaani#xz;1.0 from list in [default]
org.xerial.snappy#snappy-java;1.0.5 from local-m2-cache in [default]
:: evicted modules:
org.slf4j#slf4j-api;1.7.5 by [org.slf4j#slf4j-api;1.7.7] in [default]
org.slf4j#slf4j-api;1.6.4 by [org.slf4j#slf4j-api;1.7.5] in [default]
---------------------------------------------------------------------
|                  |            modules            ||   artifacts   |
|       conf       | number| search|dwnlded|evicted|| number|dwnlded|
---------------------------------------------------------------------
|      default     |   19  |   2   |   2   |   2   ||   17  |   0   |
---------------------------------------------------------------------
```

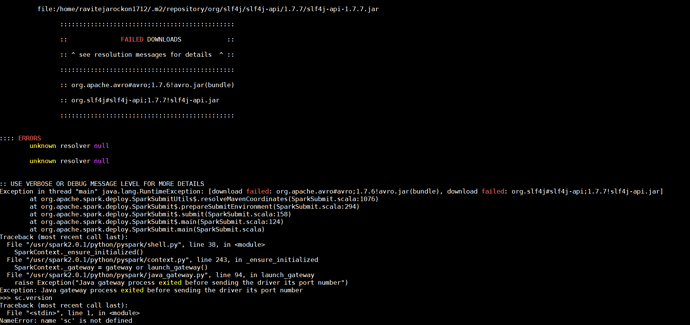

```
:: problems summary ::
:::: WARNINGS
[NOT FOUND  ] org.apache.avro#avro;1.7.6!avro.jar(bundle) (1ms)
==== local-m2-cache: tried
file:/home/ravitejarockon1712/.m2/repository/org/apache/avro/avro/1.7.6/avro-1.7.6.jar
[NOT FOUND  ] org.slf4j#slf4j-api;1.7.7!slf4j-api.jar (9ms)
==== local-m2-cache: tried
file:/home/ravitejarockon1712/.m2/repository/org/slf4j/slf4j-api/1.7.7/slf4j-api-1.7.7.jar
::::::::::::::::::::::::::::::::::::::::::::::
:: FAILED DOWNLOADS ::
:: ^ see resolution messages for details ^ ::
::::::::::::::::::::::::::::::::::::::::::::::
:: org.apache.avro#avro;1.7.6!avro.jar(bundle)
:: org.slf4j#slf4j-api;1.7.7!slf4j-api.jar
::::::::::::::::::::::::::::::::::::::::::::::
:::: ERRORS
unknown resolver null
unknown resolver null
:: USE VERBOSE OR DEBUG MESSAGE LEVEL FOR MORE DETAILS
Exception in thread "main" java.lang.RuntimeException: [download failed: org.apache.avro#avro;1.7.6!avro.jar(bundle), download failed: org.slf4j#slf4j-api;1.7.7!slf4j-api.jar]
	at org.apache.spark.deploy.SparkSubmitUtils$.resolveMavenCoordinates(SparkSubmit.scala:1076)
	at org.apache.spark.deploy.SparkSubmit$.prepareSubmitEnvironment(SparkSubmit.scala:294)
	at org.apache.spark.deploy.SparkSubmit$.submit(SparkSubmit.scala:158)
	at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:124)
	at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)
Traceback (most recent call last):
  File "/usr/spark2.0.1/python/pyspark/shell.py", line 38, in <module>
    SparkContext._ensure_initialized()
  File "/usr/spark2.0.1/python/pyspark/context.py", line 243, in _ensure_initialized
    SparkContext._gateway = gateway or launch_gateway()
  File "/usr/spark2.0.1/python/pyspark/java_gateway.py", line 94, in launch_gateway
    raise Exception("Java gateway process exited before sending the driver its port number")
Exception: Java gateway process exited before sending the driver its port number
>>> sc.version
Traceback (most recent call last):
  File "<stdin>", line 1, in <module>
NameError: name 'sc' is not defined
>>> exit()
```
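Both traces fail at the same step: Ivy says it found `org.apache.avro#avro;1.7.6` and `org.slf4j#slf4j-api;1.7.7` in `local-m2-cache`, yet the JARs are NOT FOUND at the `~/.m2/repository` paths it tried, and the PySpark `NameError` on `sc` is just a consequence of the JVM never starting. One possible explanation (an assumption, not something the logs confirm) is that the local Maven repository holds the `.pom` for these versions but not the `.jar`. The sketch below checks for exactly that. The helper names `m2_paths` and `find_incomplete` are hypothetical, and the path logic assumes the standard Maven repository layout, which matches the paths in the `tried` lines.

```python
import os


def m2_paths(repo_root, group, artifact, version):
    """Return (pom_path, jar_path) for one coordinate, assuming the standard
    Maven layout: <repo>/<group as dirs>/<artifact>/<version>/<artifact>-<version>.<ext>
    """
    base = os.path.join(repo_root, *(group.split(".") + [artifact, version]))
    stem = "%s-%s" % (artifact, version)
    return os.path.join(base, stem + ".pom"), os.path.join(base, stem + ".jar")


def find_incomplete(repo_root, coords):
    """Return the "group:artifact:version" coordinates whose .pom exists
    under repo_root while the matching .jar does not."""
    incomplete = []
    for coord in coords:
        group, artifact, version = coord.split(":")
        pom, jar = m2_paths(repo_root, group, artifact, version)
        if os.path.isfile(pom) and not os.path.isfile(jar):
            incomplete.append(coord)
    return incomplete


if __name__ == "__main__":
    # The two artifacts reported as "download failed" in the traces above.
    failed = ["org.apache.avro:avro:1.7.6", "org.slf4j:slf4j-api:1.7.7"]
    repo = os.path.expanduser("~/.m2/repository")
    for coord in find_incomplete(repo, failed):
        print("POM present but JAR missing: " + coord)
```

If either coordinate is reported, a commonly suggested workaround is to delete that version's directory under `~/.m2/repository` (and the matching entry under `~/.ivy2/cache`) so that Ivy resolves the artifact from `central` instead; treat this as untested for this particular setup.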