Hello,

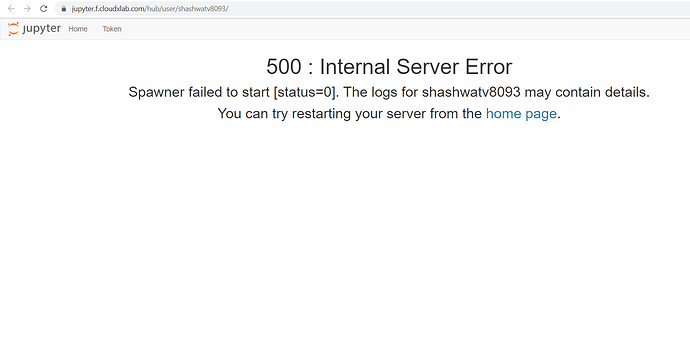

I am unable to access Jupyter Notebook on CloudxLab. Please look into this and restore my access as soon as possible.

Thanks!

Hello,

I have gone through the link:

My User Disk Space Quota in the lab has exceeded. How can I clean the unnecessary files? - CloudxLab Discussions

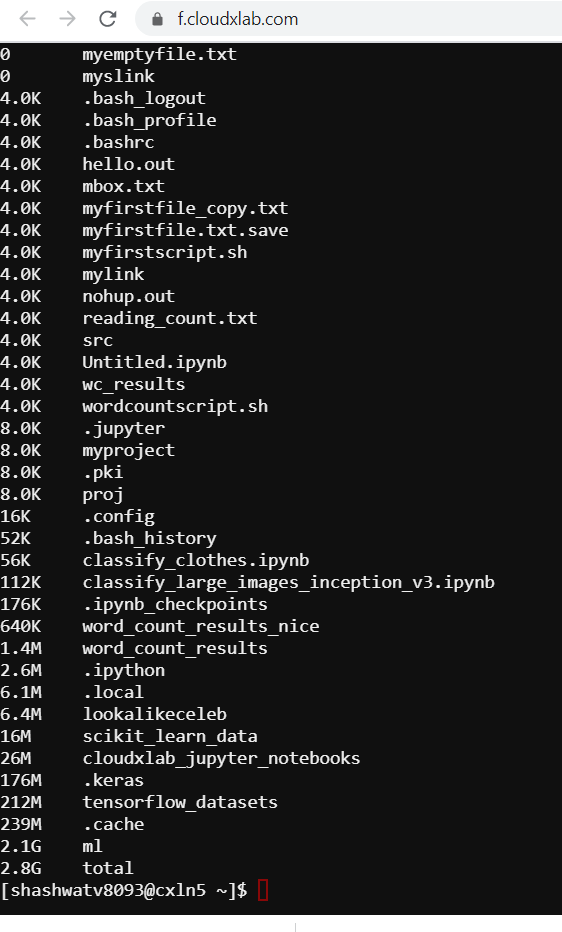

The main issue, though, is that I cannot see my files and folders, so I do not know which files to back up before deleting anything.

Also, for two Deep Learning projects, I uploaded the final notebooks to the Jupyter home page, outside any folder, but their datasets were uploaded and saved in a different location. As far as I recall, those dataset files were very large. Would it be a good idea to delete that data as well? If so, please help.

Could we have a short video call to go over this, so that we can resolve it without confusion and avoid deleting any important files?

Appreciate your help and patience!

Thanks & regards

You can try the following command to view the hidden files:

```shell
ls -al
```

Also, you will find most of your files inside the `cloudxlab_jupyter_notebooks` folder. As for the large files, if you are no longer using them, we would request you to delete them.
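`ls -al` shows hidden entries, but not how much space each folder really uses. To decide what to back up or delete (for example the `ml` folder or a hidden `.cache` folder), it can help to list the largest entries first. The sketch below assumes the lab console runs bash with GNU coreutils (`du`, `sort -h`); `largest_entries` is a hypothetical helper name, not a CloudxLab tool, and it only reads sizes, it never deletes anything.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not a CloudxLab tool): list the N largest entries
# directly inside DIR, including hidden ones such as .cache, smallest first
# and largest last. It only reads sizes; nothing is deleted.
largest_entries() (
  # The ( ) body runs in a subshell, so the shopt changes below stay local.
  dir="${1:-$HOME}"   # directory to inspect; defaults to the home directory
  n="${2:-20}"        # number of entries to show
  # dotglob: "*" also matches hidden names (never . or ..).
  # nullglob: an empty directory gives an empty list instead of a literal "*".
  shopt -s nullglob dotglob
  entries=("$dir"/*)
  [ "${#entries[@]}" -gt 0 ] || exit 0
  # du -s: one total per entry; -h: human-readable sizes (K, M, G).
  # sort -h (GNU coreutils) orders those human-readable sizes correctly.
  du -sh -- "${entries[@]}" 2>/dev/null | sort -h | tail -n "$n"
)
```

For example, paste the function into the console, run `largest_entries ~ 20` to see what takes the most space in your home directory, then `largest_entries ~/ml 20` to drill into a specific folder. Copy anything you still need to your own machine before deleting it.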

Hi CloudxLab Team,

I have managed to reduce my data to less than 3 GB, but I am still unable to access the lab. Could you please check and resolve this as soon as possible?

Also, from the console I can see that the cache holds 239 MB of data. How can I delete it from the console?

I have deleted files to the best of my knowledge, but the `ml` directory still holds 2.1 GB of data. I am not sure which files I can delete without breaking anything. I would appreciate your support here, as I am sure many others have faced the same situation.

Please help urgently, as a lot of time is being lost just troubleshooting this!

Thanks,

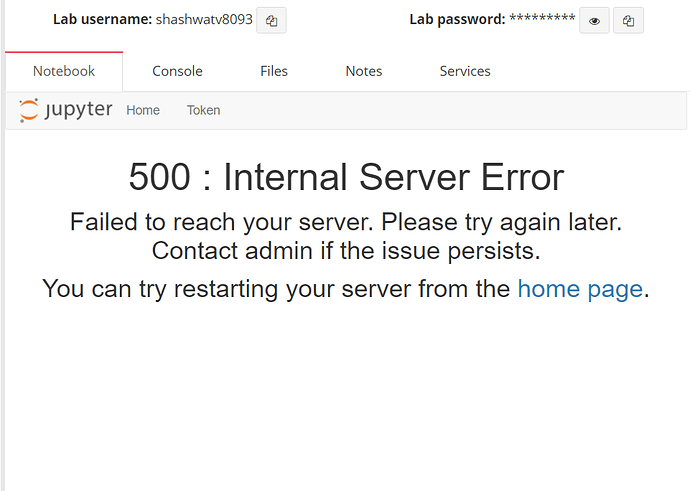

I checked your lab, and it seems to be working from my end. Could you please check again and let me know if you are still facing this issue? Thanks.

Hi,

I have just checked it. It is now working fine.

Thanks for your help!