Hi Sandeep,

As you instructed, I ran the commands below in the web console, but I could not start a Jupyter notebook to run PySpark.

“Start Jupyter using following commands:

export SPARK_HOME="/usr/spark2.0.1/"

export PYTHONPATH=$SPARK_HOME/python/:$SPARK_HOME/python/lib/py4j-0.10.3-src.zip:$SPARK_HOME/python/lib/pyspark.zip:$PYTHONPATH

export PATH=/usr/local/anaconda/bin:$PATH

jupyter notebook --no-browser --ip 0.0.0.0 --port 8888"

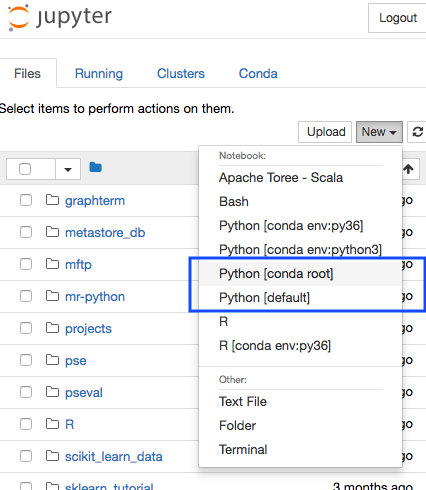

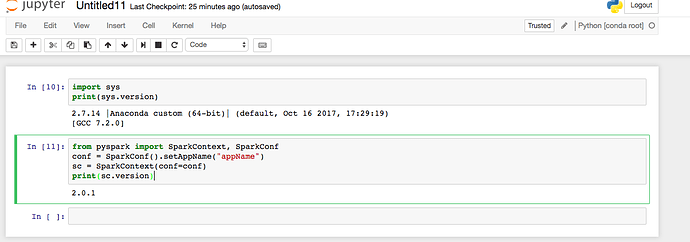

So I opened another Jupyter notebook and tried to run the following code, but it failed with an error:

“from pyspark import SparkContext, SparkConf

conf = SparkConf().setAppName("appName")

sc = SparkContext(conf=conf)

rdd = sc.textFile("/data/mr/wordcount/input/")

print(rdd.take(10))

sc.version"

“ModuleNotFoundError: No module named 'pyspark'”
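
In case it helps narrow this down, here is a small check that could be run in the second notebook. It is only a sketch: `spark_env_report` is a name I made up, and my guess (an assumption, not something I have confirmed) is that the second notebook's kernel was not started from the shell where the exports above were run, so it never saw SPARK_HOME or PYTHONPATH. The check reports which Python the kernel uses and whether any Spark or py4j entries are on its import path.

```python
import os
import sys


def spark_env_report(environ=None, path=None):
    """Collect the settings that decide whether `import pyspark` can work.

    `environ` and `path` default to the kernel's own environment and
    import path; they are parameters only so the function can be tried
    with sample values.
    """
    environ = os.environ if environ is None else environ
    path = sys.path if path is None else path
    return {
        # Which interpreter this kernel is actually running.
        "python": sys.executable,
        # Values set by the export commands, or None if not inherited.
        "SPARK_HOME": environ.get("SPARK_HOME"),
        "PYTHONPATH": environ.get("PYTHONPATH"),
        # Import-path entries that look like Spark or py4j locations.
        "spark_entries_on_sys_path": [
            p for p in path if "spark" in p.lower() or "py4j" in p.lower()
        ],
    }


for key, value in spark_env_report().items():
    print(key, "=", value)
```

If SPARK_HOME prints as None and the last list is empty, the kernel did not pick up the exports, which would be consistent with the error above.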

How can I run PySpark within Jupyter? Thanks.