I am not able to create a Parquet file because of a permission denied error.

Currently I have given the permission “rwxrwxrwx” to the folders in HDFS.

Could you share the code? Chances are that you are accessing a file which you don’t have permission to.

DF.write.mode("Overwrite").parquet("home/username/")

Please respond to respective query

DF.write.mode("Overwrite").parquet("home/username/")

When a path does not start with “/” it is a relative path; if it starts with “/” it is an absolute path.

If it is relative, the file or folder is looked up inside the home directory of the user.

So “home/username” is equal to /home/sandeepgiri/home/username if my home directory is /home/sandeepgiri.
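
To see this rule in action, here is a small sketch you can run on the local Linux filesystem. It uses a temporary scratch directory as a stand-in for a home directory (the directory and the variable names are made up for illustration), and it only demonstrates how a relative path is resolved; it does not touch HDFS.

```shell
# Hypothetical scratch directory standing in for a user's home directory.
home_dir="$(mktemp -d)"
cd "$home_dir"

# Relative path (no leading "/"): resolved against the current directory.
mkdir -p home/username
relative_resolved="$(cd home/username && pwd -P)"

# The same location written out as an absolute path (leading "/").
absolute_path="$(pwd -P)/home/username"

# Both lines print the same directory.
echo "$relative_resolved"
echo "$absolute_path"
```

Both lines should print the same folder, which is the point: “home/username” ends up nested inside the directory you start from, not at /home/username.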

I would strongly suggest going through the Linux basics: https://cloudxlab.com/assessment/playlist-intro/2/linux-basics

Thanks for the quick response.

But the error I am facing here is “Permission denied”, not “directory not found”.

And I tried all the other combinations, such as with a slash, without a slash, etc.

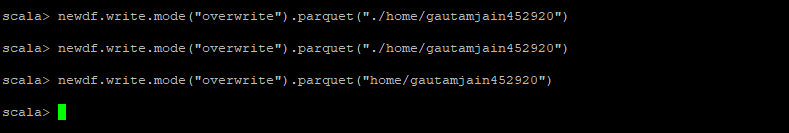

I have tried these combinations. They do not throw an exception, but the parquet file is not generated in any of the mentioned paths.

Can you try the following:

newdf.write.mode("overwrite").parquet("mynewdf");

Also, “./home/gautam” and “home/gautam” are both the same as “/home/yourlogin/home/gautam”.

Again, I would suggest that you understand the difference between relative and absolute paths and the meaning of “.”, “/” and “./”.

Try experimenting with files/folders in linux using the following set of commands:

mkdir

cd

pwd

ls

touch

Not working. Tried all kinds of paths.

Please share full code and the steps. Let me look into it.

import org.apache.spark.sql.SQLContext

val sqlcontext = new org.apache.spark.sql.SQLContext(sc)

val dataframe_mysql = sqlcontext.read.format("jdbc").option("url", "jdbc:mysql://ip-172-31-20-247/retail_db").option("driver", "com.mysql.jdbc.Driver").option("dbtable", "uber").option("user", "sqoopuser").option("password", "NHkkP876rp").load()

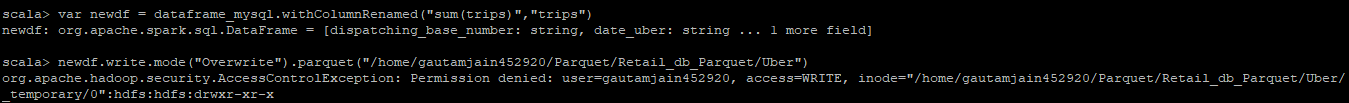

var newdf = dataframe_mysql.withColumnRenamed("sum(trips)", "Trips")

newdf.write.mode("overwrite").parquet("/Parquet/Retail_db_Parquet/Uber/")

newdf.write.mode("overwrite").parquet("./Parquet/Retail_db_Parquet/Uber/")

newdf.write.mode("overwrite").parquet(".Parquet/Retail_db_Parquet/Uber/")

newdf.write.mode("overwrite").parquet(".Parquet/Retail_db_Parquet/Uber")

newdf.write.mode("overwrite").parquet("home/gautamjain452920/Parquet/Retail_db_Parquet/Uber")

newdf.write.mode("overwrite").parquet("/home/gautamjain452920/Parquet/Retail_db_Parquet/Uber")

Try dataframe_mysql.show() here to ensure that you are able to access the database. Also, I am assuming you are using SBT, because in the spark-shell we don’t need to create an SQLContext.

Try newdf.show() to test whether you are able to access the data. Also, please try things out in the spark-shell before creating a full-fledged Scala program.

The path starting with “/Parquet” looks for “Parquet” in the root directory, which you would not have access to.

The relative path “./Parquet/Retail_db_Parquet/Uber/” expects that the parent folder path “Parquet/Retail_db_Parquet” is already created in your HDFS, but “Uber” must not be created. So, either just try:

newdf.write.mode("overwrite").parquet("Uber")

or first, create the parent folder structure like this:

hadoop fs -mkdir -p Parquet/Retail_db_Parquet
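
If it helps, the parent-folder step can be sketched as a short script. The path is the one from this thread; the variable names are only for illustration, and the script assumes the hadoop command is on your PATH (it skips the HDFS part otherwise). The -ls -d call is there so you can check the owner and permissions of the folder before writing into it.

```shell
# Target path taken from the thread. It is relative, so HDFS resolves it
# against your HDFS home directory.
target="Parquet/Retail_db_Parquet/Uber"

# Parent folder that should exist before the write: Parquet/Retail_db_Parquet
parent="$(dirname "$target")"

if command -v hadoop >/dev/null 2>&1; then
    # -p creates missing intermediate folders and is harmless if they exist.
    hadoop fs -mkdir -p "$parent"
    # -d lists the folder entry itself, showing its owner and permissions.
    hadoop fs -ls -d "$parent"
else
    echo "hadoop CLI not found; skipping the HDFS commands" >&2
fi
```

After the Spark write has run, hadoop fs -ls on the target path should show the generated part files.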

Please get comfortable with linux/unix file path nomenclature.