If yes, all my work will be lost. How will I claim my certification then?

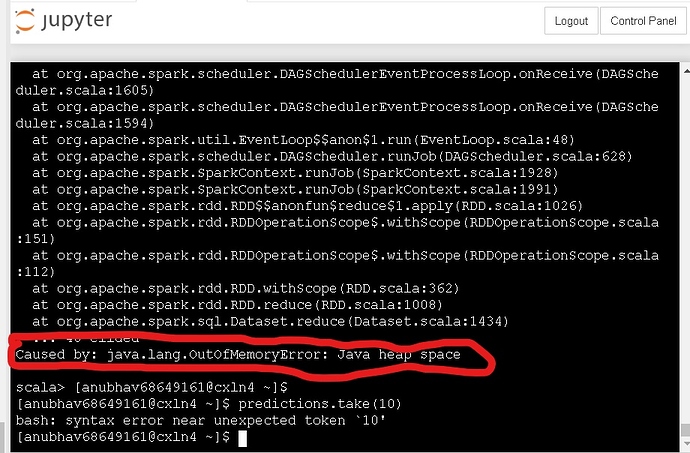

I am currently on MACHINE LEARNING WITH SPARK and doing the hands-on exercise provided in the video, but I am getting the above error…

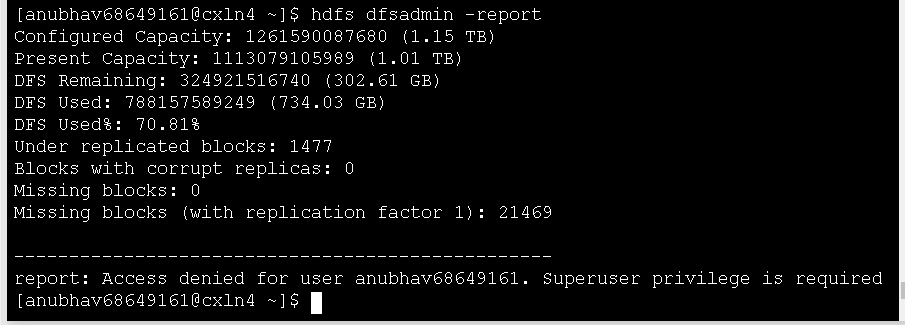

The above error is showing up, but I still have 300 GB of disk space left, as shown in the attached image.

Please increase the disk space, as we also have to practice…