Hi Jinesh,

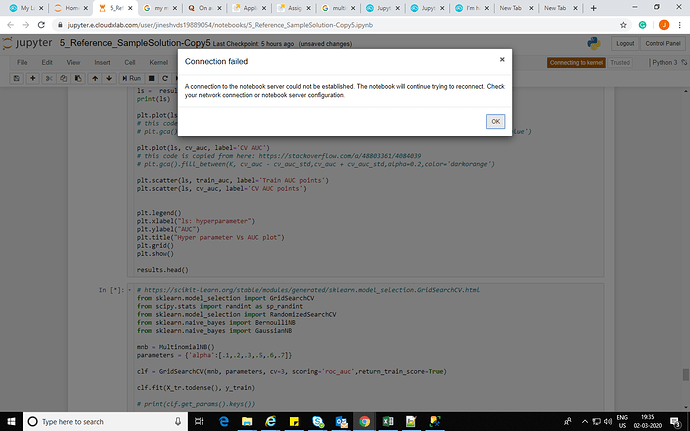

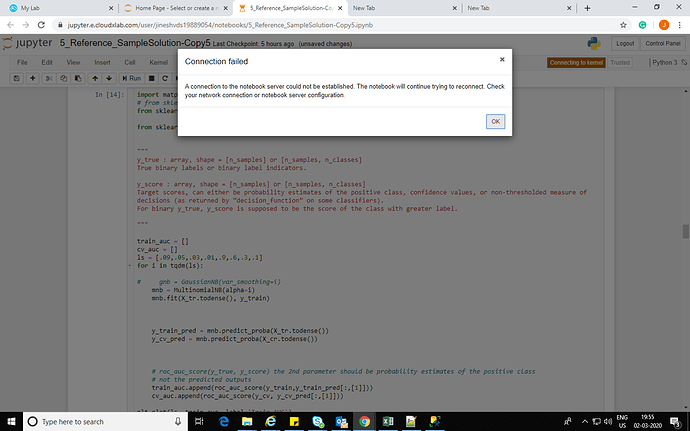

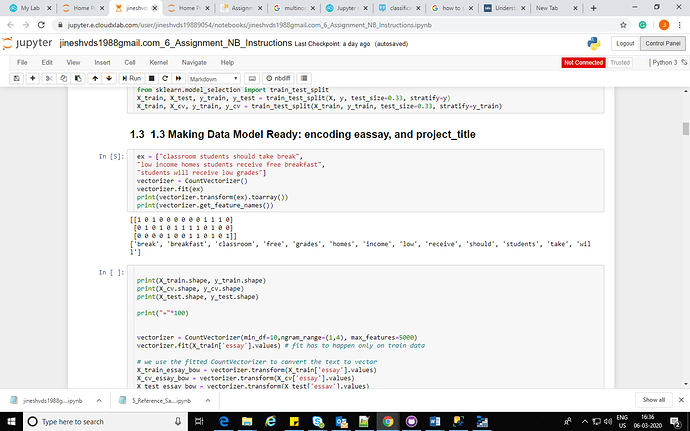

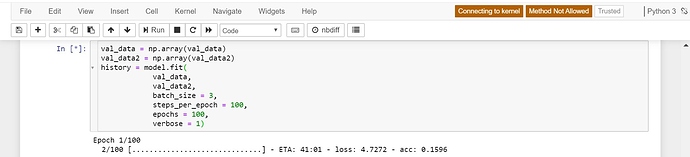

As your notebook screenshots show, the notebook gets disconnected whenever you run hyperparameter tuning.

Hyperparameter tuning is a very compute-intensive process.

Let me give a simple breakdown:

If training takes 1 minute and inference takes 0.25 minutes for each fold, 10-fold cross-validation will take 10 * 1.25 = 12.5 minutes.

Now, if there are three hyperparameters with three candidate values each, cross-validation will be run 3 * 3 * 3 = 27 times. The complete hyperparameter search will therefore take 27 * 12.5 = 337.5 minutes, which is 5.625 hours.
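The same estimate can be sketched in a few lines of Python. This is only a rough back-of-the-envelope calculation using the illustrative timings above; the parameter names `param_a`, `param_b`, `param_c` and the helper `estimate_grid_search_minutes` are hypothetical and not taken from your notebook.

```python
from itertools import product

# Illustrative timings from the explanation above (not measured values).
train_minutes = 1.0    # time to train on one fold
infer_minutes = 0.25   # time to run inference on one fold
n_folds = 10

# Hypothetical grid: three hyperparameters with three candidate values each.
param_grid = {
    "param_a": [1, 2, 3],
    "param_b": [1, 2, 3],
    "param_c": [1, 2, 3],
}


def estimate_grid_search_minutes(param_grid, n_folds, train_minutes, infer_minutes):
    """Return (number of combinations, estimated total minutes) for a full grid search."""
    # Every combination of candidate values is evaluated once.
    n_combinations = len(list(product(*param_grid.values())))
    # Each evaluation runs one complete k-fold cross-validation.
    minutes_per_cv = n_folds * (train_minutes + infer_minutes)
    return n_combinations, n_combinations * minutes_per_cv


n_combinations, total_minutes = estimate_grid_search_minutes(
    param_grid, n_folds, train_minutes, infer_minutes
)
print(f"{n_combinations} combinations, {total_minutes} minutes, {total_minutes / 60} hours")
```

Adding one more value to any list, or one more hyperparameter, multiplies the total, which is why the search time grows so quickly.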

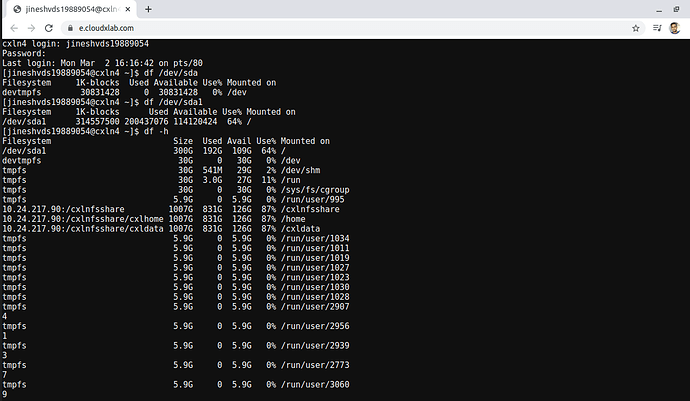

So you can see that the hyperparameter search takes a huge toll on time. Also, please note that hyperparameter tuning will use 100% of the CPU while it runs.

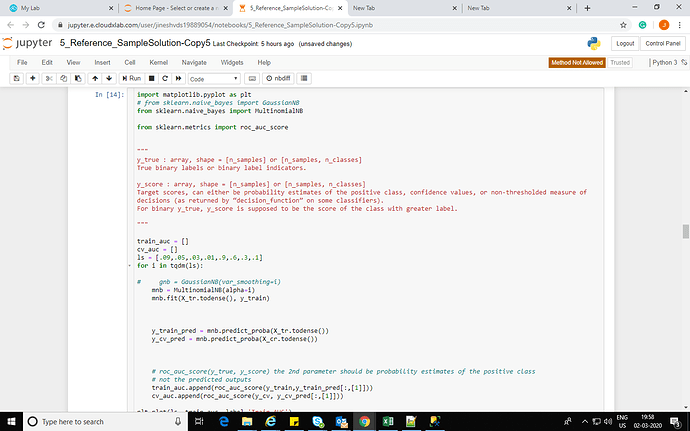

Currently, the fair usage policy of CloudxLab allows 1 hour of Jupyter active time and 2 GB of RAM, so the process gets killed. I would suggest using fewer hyperparameters (or fewer candidate values for each) in hyperparameter tuning.
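To get a feel for how small the grid needs to be, here is a rough sketch that checks a few grid shapes against the 1 hour limit. It reuses the illustrative timings from earlier (1 minute training, 0.25 minutes inference, 10 folds) and assumes the whole search must finish within the hour; it does not account for the RAM limit. The helper `grid_size` and the example shapes are hypothetical.

```python
import math

# Assumptions: illustrative timings from the breakdown, and a 60 minute budget.
budget_minutes = 60
minutes_per_cv = 10 * (1.0 + 0.25)  # one 10-fold cross-validation = 12.5 minutes

# Largest number of hyperparameter combinations that fits in the budget.
max_combinations = math.floor(budget_minutes / minutes_per_cv)


def grid_size(values_per_param):
    """Number of combinations for a grid, given the count of values per hyperparameter."""
    return math.prod(values_per_param)


# Each tuple lists how many candidate values each hyperparameter has.
candidate_shapes = [(3, 3, 3), (2, 2, 2), (2, 2), (3,)]

results = {}
for shape in candidate_shapes:
    n = grid_size(shape)
    total = n * minutes_per_cv
    results[shape] = (n, total, total <= budget_minutes)
    print(f"grid {shape}: {n} combinations, {total} minutes, fits={total <= budget_minutes}")

print(f"at most {max_combinations} combinations fit in {budget_minutes} minutes")
```

With these numbers only very small grids fit, for example two hyperparameters with two values each. Your actual timings will differ, so it is worth timing a single cross-validation run first and plugging that number in.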

I hope this explains the issue.