I am currently working on proposing data warehousing solution using Hadoop eco systems.

- Data Ingestion ( Data source - Structured, unstructured, Feed etc) - NFI tool

2, Data Lake - HDFS - Data shore - HDFS( Processed data?)

- Data Processing - Spark

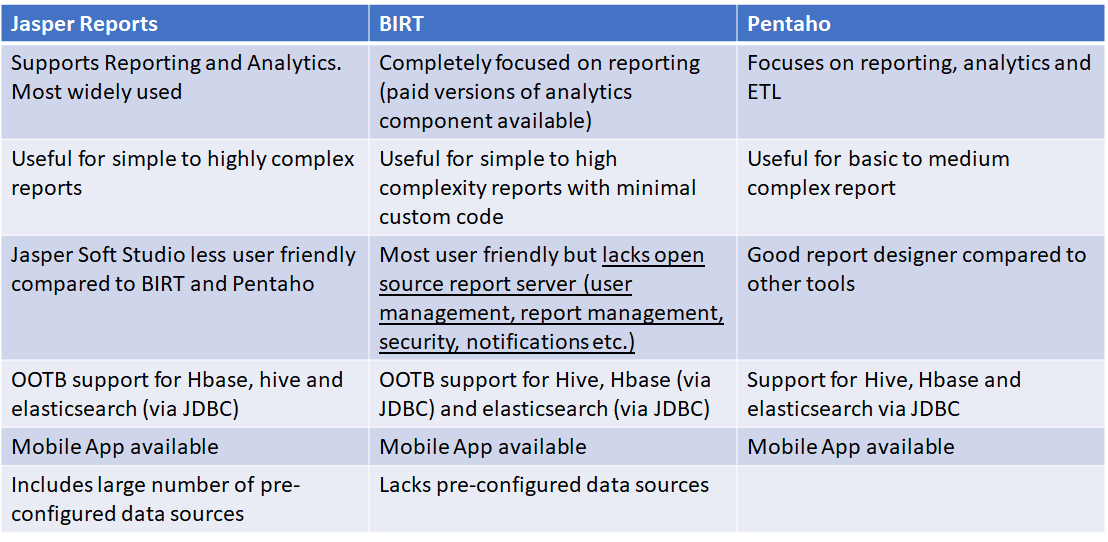

- BI - Apache Zeppelin/BIRT(for reports/dashboard, real time reports etc)

there is need of master data management, shall I consider HDFS itself for this purpose or should I consider another DB for final data storage( target data wareshouse system).

Let me know above architecture tools are good enough? or if any suggestions would be greatly appreciated.

Thanks,

Srinivasan