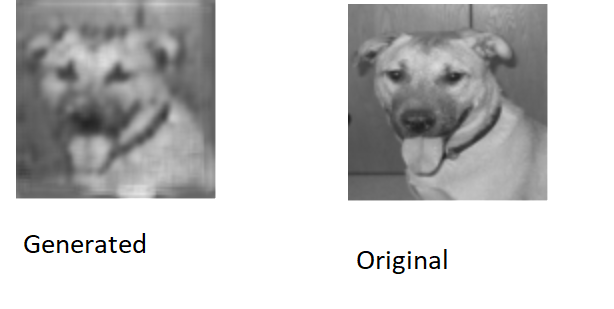

For my CNN encoder-decoder, the MSE loss is very low (Loss: tensor(0.0067, grad_fn=)), but the output images are still not clear.
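To put the loss value in pixel terms, here is a quick calculation. It assumes the pixel intensities are scaled to [0, 1], which the post does not state; the variable names are only illustrative.

```python
import math

mse = 0.0067  # loss value reported above

# The square root of the MSE is the typical per-pixel error,
# expressed in the same units as the pixel values.
rmse = math.sqrt(mse)

# Assumption: pixels are scaled to [0, 1]; multiply by 255 for an 8-bit scale.
rmse_8bit = rmse * 255

print(f"RMSE = {rmse:.4f} (about {rmse_8bit:.0f} of 255 gray levels)")
```

Under that assumption, a loss that looks small can still correspond to a visible average error per pixel, and because MSE averages over all pixels it can stay low even when fine detail is blurred.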

My network architecture is:

| Layer (type) | Output Shape | Param # |
|---|---|---|
| Conv2d-1 | [-1, 6, 98, 98] | 60 |
| Conv2d-2 | [-1, 10, 96, 96] | 550 |
| Conv2d-3 | [-1, 20, 94, 94] | 1,820 |
| Conv2d-4 | [-1, 9, 92, 92] | 1,629 |
| MaxPool2d-5 | [[-1, 9, 46, 46], [-1, 9, 46, 46]] | 0 |
| MaxPool2d-6 | [[-1, 9, 23, 23], [-1, 9, 23, 23]] | 0 |
| Conv2d-7 | [-1, 9, 23, 23] | 738 |
| MaxPool2d-8 | [[-1, 9, 11, 11], [-1, 9, 11, 11]] | 0 |
| MaxUnpool2d-9 | [-1, 9, 23, 23] | 0 |
| Conv2d-10 | [-1, 9, 23, 23] | 738 |
| MaxUnpool2d-11 | [-1, 9, 46, 46] | 0 |
| MaxUnpool2d-12 | [-1, 9, 92, 92] | 0 |
| Conv2d-13 | [-1, 9, 92, 92] | 738 |
| Conv2d-14 | [-1, 20, 94, 94] | 1,640 |
| Conv2d-15 | [-1, 10, 96, 96] | 1,810 |
| Conv2d-16 | [-1, 6, 98, 98] | 546 |
| Conv2d-17 | [-1, 1, 100, 100] | 55 |

Total params: 10,324

Trainable params: 10,324

Non-trainable params: 0
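For reference, here is a minimal PyTorch sketch that reproduces the output shapes and parameter counts in the summary. It is inferred from the summary alone, so the class name, layer names, ReLU activations, and the kernel and padding choices (3x3 kernels, no padding in the encoder, `padding=2` in the final decoder convolutions) are assumptions and not necessarily the actual code.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class EncoderDecoder(nn.Module):  # hypothetical name
    def __init__(self):
        super().__init__()
        # Encoder: 3x3 convolutions without padding, 100 -> 98 -> 96 -> 94 -> 92.
        self.enc1 = nn.Conv2d(1, 6, 3)
        self.enc2 = nn.Conv2d(6, 10, 3)
        self.enc3 = nn.Conv2d(10, 20, 3)
        self.enc4 = nn.Conv2d(20, 9, 3)
        # Pooling returns indices so that MaxUnpool2d can invert it later.
        self.pool = nn.MaxPool2d(2, return_indices=True)
        self.unpool = nn.MaxUnpool2d(2)
        # Size-preserving convolutions around the bottleneck.
        self.mid1 = nn.Conv2d(9, 9, 3, padding=1)
        self.mid2 = nn.Conv2d(9, 9, 3, padding=1)
        # Decoder: padding=2 with a 3x3 kernel grows each side by 2 pixels.
        self.dec1 = nn.Conv2d(9, 9, 3, padding=1)
        self.dec2 = nn.Conv2d(9, 20, 3, padding=2)
        self.dec3 = nn.Conv2d(20, 10, 3, padding=2)
        self.dec4 = nn.Conv2d(10, 6, 3, padding=2)
        self.dec5 = nn.Conv2d(6, 1, 3, padding=2)

    def forward(self, x):
        # ReLU activations are an assumption; the summary does not show them.
        x = F.relu(self.enc1(x))
        x = F.relu(self.enc2(x))
        x = F.relu(self.enc3(x))
        x = F.relu(self.enc4(x))                      # [N, 9, 92, 92]
        x, idx1 = self.pool(x)                        # [N, 9, 46, 46]
        x, idx2 = self.pool(x)                        # [N, 9, 23, 23]
        x = F.relu(self.mid1(x))
        size3 = x.size()                              # needed because 23 is odd
        x, idx3 = self.pool(x)                        # [N, 9, 11, 11]
        x = self.unpool(x, idx3, output_size=size3)   # [N, 9, 23, 23]
        x = F.relu(self.mid2(x))
        x = self.unpool(x, idx2)                      # [N, 9, 46, 46]
        x = self.unpool(x, idx1)                      # [N, 9, 92, 92]
        x = F.relu(self.dec1(x))
        x = F.relu(self.dec2(x))                      # [N, 20, 94, 94]
        x = F.relu(self.dec3(x))                      # [N, 10, 96, 96]
        x = F.relu(self.dec4(x))                      # [N, 6, 98, 98]
        return self.dec5(x)                           # [N, 1, 100, 100]


model = EncoderDecoder()
out = model(torch.randn(2, 1, 100, 100))
n_params = sum(p.numel() for p in model.parameters())
print(out.shape, n_params)
```

Note that the three pooling steps reduce each 9-channel feature map to 11x11, and `MaxUnpool2d` fills every position except the recorded maxima with zeros, so the decoder has to reconstruct most pixels from sparse inputs.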

images:

Please let me know which other parameters I can tweak to make the output images clearer. My current settings are lr = 0.01 and epochs = 10.