Hi,

I was attempting the Cat vs Non-cat classifier assignment.

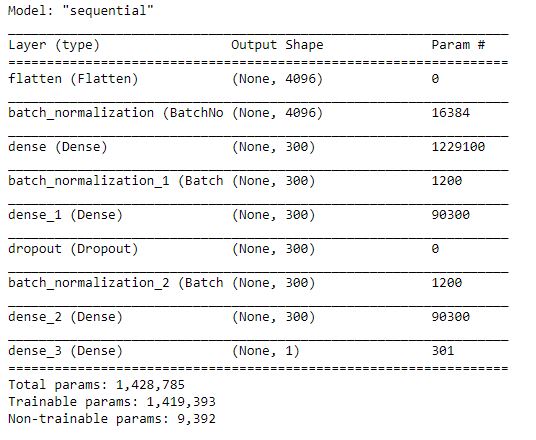

I first tried using a DNN alone, after converting the images to grayscale and normalizing them.
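For context, the grayscale-and-normalize step can be sketched roughly as below. This is a minimal illustration, not the exact notebook code: it assumes the images are a `uint8` NumPy array of shape `(N, H, W, 3)`, uses the common BT.601 luma weights for the grayscale conversion, and the names `to_grayscale_normalized` and `dummy_images` (random 64x64 stand-in data) are hypothetical.

```python
import numpy as np


def to_grayscale_normalized(images: np.ndarray) -> np.ndarray:
    """Convert uint8 RGB images (N, H, W, 3) to float32 grayscale in [0, 1]."""
    # BT.601 luma weights for R, G, B (an assumption; other weightings exist).
    weights = np.array([0.299, 0.587, 0.114], dtype=np.float32)
    # Weighted sum over the channel axis gives shape (N, H, W).
    gray = images.astype(np.float32) @ weights
    # Scale pixel values from [0, 255] to [0, 1]; clip guards against rounding.
    return np.clip(gray / 255.0, 0.0, 1.0)


# Hypothetical stand-in data: 4 random RGB images of size 64x64.
rng = np.random.default_rng(0)
dummy_images = rng.integers(0, 256, size=(4, 64, 64, 3), dtype=np.uint8)

gray = to_grayscale_normalized(dummy_images)
# Flatten each image into a vector, as a dense (DNN) input layer expects.
flat = gray.reshape(gray.shape[0], -1)
print(gray.shape, flat.shape)
```

Whatever preprocessing is used, the same function would need to be applied to the train, validation and test sets so that all three see inputs on the same scale.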

The results during training (loss and accuracy for both train and validation) fluctuated a lot and did not settle down.

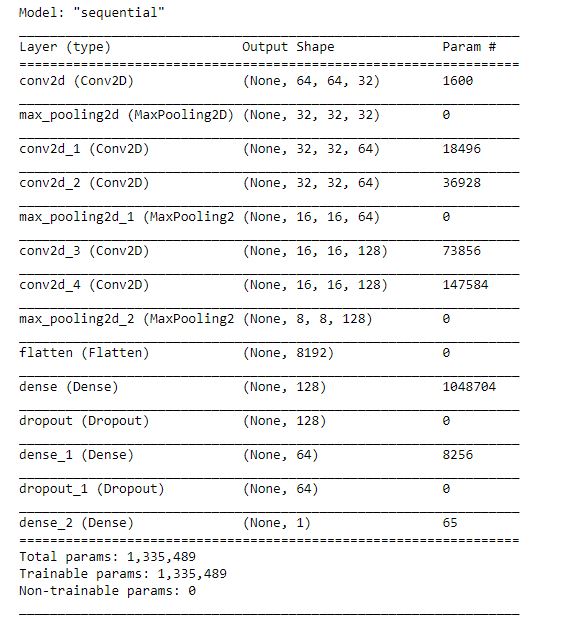

To make sure I had tried other options, I also used a CNN. With it I see a validation accuracy of around 0.697 and a test accuracy of around 0.66. But when the model is run on the test dataset, the accuracy is too low, at 0.34.

I tried using black-and-white images as well, and I still see values similar to these.

I am not sure where I am going wrong. I would appreciate it if you could help.

The source file is in my “cloudxlab_jupyter_notebooks” directory, under the file name “cat_vs_noncat_classifier.ipynb”.

https://jupyter.e.cloudxlab.com/user/preedesh2010/tree/cloudxlab_jupyter_notebooks/cat_vs_noncat_classifier.ipynb

Each of the strategies mentioned above (a CNN on the original normalized images, a CNN on the normalized black-and-white images, and a DNN) is in its own section of the code, and I have tried to add sufficient comments.