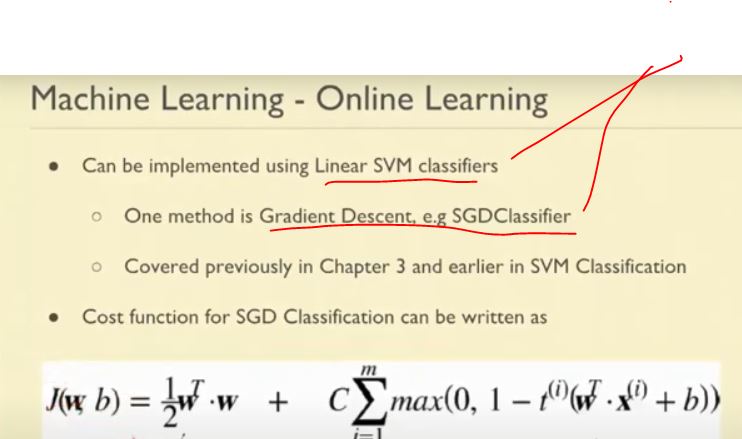

The PPT slide above contains two statements:

a) The first line is about Support Vector Machines.

b) The second line is about Gradient Descent.

I didn’t understand these two lines. Do the two statements complement or contradict each other? Please clarify.